AI Services

The AI Services module in Amorphic offers administrators a unified interface to discover, configure, and manage Large Language Models (LLMs) and other generative AI capabilities available within the platform.

This section of the platform provides the following key functionalities:

- Manage AI Services: Enable or disable AI features across different Amorphic services.

- AI Models: Administer all available AI models, including configuration, usage assignments, and access control.

- RAG Engine: Monitor and analyze RAG (Retrieval-Augmented Generation) engine performance, database status, and usage metrics.

Manage AI Services

The Manage AI Services section lets users selectively enable or disable AI functionality across Amorphic services. AI functionality is currently supported for the following services:

- AI Space: A consolidation of all fully AI-powered components: Knowledgebases, Agents, and Chats. Once enabled, it allows users to build and consume Knowledgebases and Agents within the platform.

- Datasets: Lets users apply AI to gain better insights into their data through the data profiling functionality, and to generate datasets from sample files.

- Data Pipelines: Enables the AI-powered LLM and Summarization nodes in Data Pipelines.

- SQL AI: Lets users consume the SQL AI agent within the playground.

- Jobs: Lets users consume AI models within their ETL job scripts.

- Datalabs: Lets users consume AI models within their datalab notebooks and studios.

AI Models

The AI Models panel gives administrators full control over every Large Language Model (LLM) available to end users inside Amorphic.

What you can do

- Sync Models From AWS – pull the latest catalogue of models, including new releases, retired versions and other changes.

- List All Available Models – list all models synced to Amorphic.

- Enable / Disable – toggle whether a model can be consumed by users within the platform.

- View Model Details – view a model's details and capabilities.

- Assign Models for services – assign models for use across different Amorphic services.

Sync Models From AWS

The Sync Models button pulls the latest model catalogue from AWS into Amorphic. The sync also picks up any changes to the capabilities of existing models.

When a model becomes legacy or reaches end-of-life, users receive an email notification so that they can update their pipelines. End-of-life models are disabled automatically, and any functionality that depends on them may break if no action is taken. Users should therefore act promptly on these notifications to avoid disruptions to their pipelines.

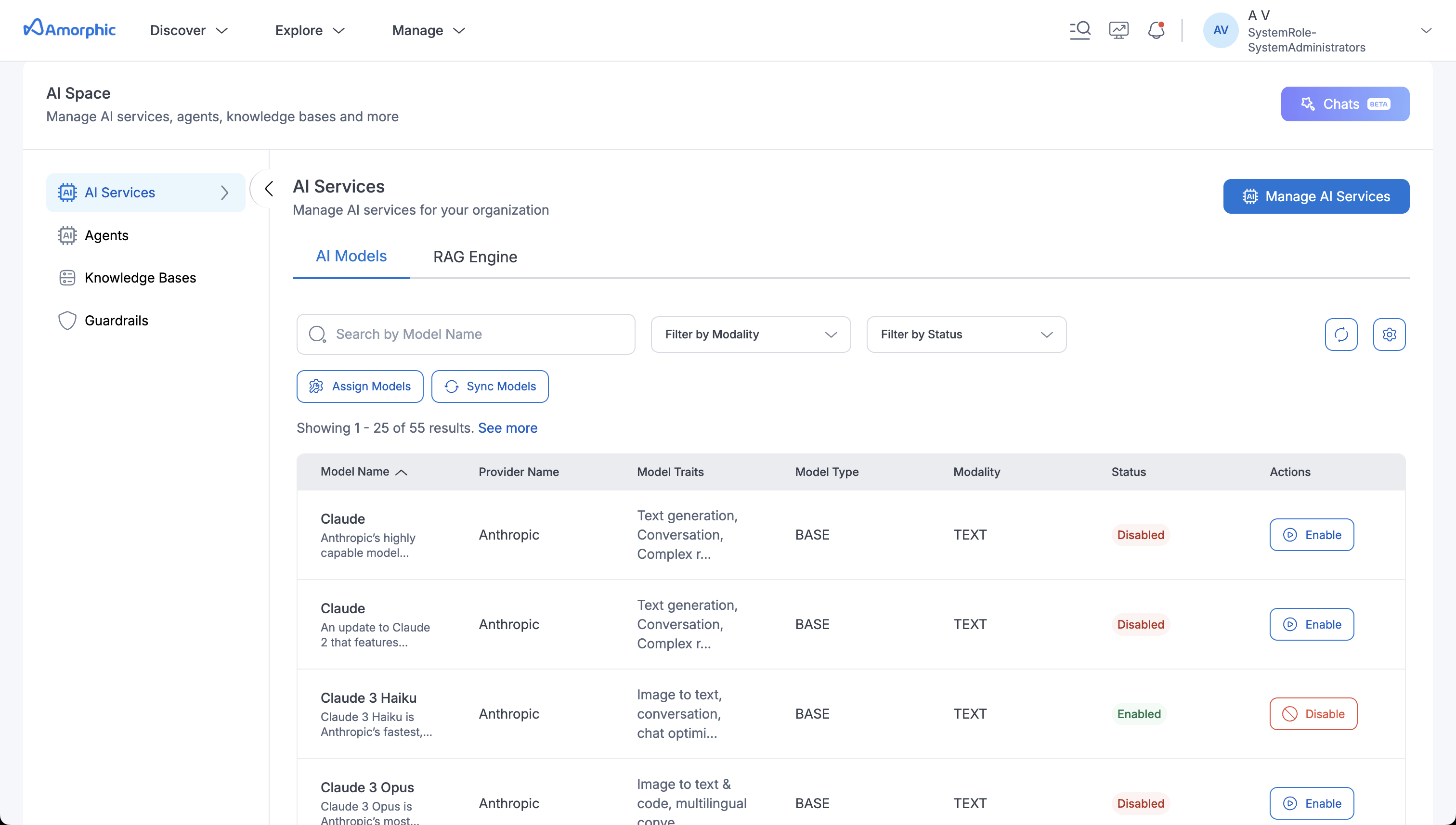
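As an illustration of the legacy status mentioned above, the sketch below groups model records by lifecycle status. It assumes the catalogue comes from Amazon Bedrock (the model listing refers to Bedrock providers) and uses the `modelLifecycle.status` field returned by Bedrock's `list_foundation_models` call. The helper names, the sample model IDs, and the default region are hypothetical, and this is not Amorphic's own sync implementation.

```python
from typing import Iterable


def split_by_lifecycle(model_summaries: Iterable[dict]) -> dict[str, list[str]]:
    """Group model IDs by lifecycle status (for example ACTIVE or LEGACY)."""
    groups: dict[str, list[str]] = {}
    for summary in model_summaries:
        # Records without lifecycle information are grouped under UNKNOWN.
        status = summary.get("modelLifecycle", {}).get("status", "UNKNOWN")
        groups.setdefault(status, []).append(summary["modelId"])
    return groups


def fetch_bedrock_models(region: str = "us-east-1") -> list[dict]:
    """Fetch the foundation model catalogue directly from Amazon Bedrock.

    Requires boto3 and AWS credentials; not called in the example below.
    """
    import boto3  # imported lazily so the grouping helper works without AWS access

    client = boto3.client("bedrock", region_name=region)
    return client.list_foundation_models()["modelSummaries"]


# Hand-written records shaped like Bedrock's response (model IDs are made up).
sample = [
    {"modelId": "example.model-a-v1", "modelLifecycle": {"status": "ACTIVE"}},
    {"modelId": "example.model-b-v1", "modelLifecycle": {"status": "LEGACY"}},
]
print(split_by_lifecycle(sample))
# {'ACTIVE': ['example.model-a-v1'], 'LEGACY': ['example.model-b-v1']}
```

A check like this, run against the live catalogue, is one way to spot models that pipelines should migrate away from before they are disabled.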

List All Available Models

The models listing displays the following columns:

| Column | Description |
|---|---|
| Model Name | Human-readable model name (e.g. Claude 3 Sonnet). |
| Model Provider | The Bedrock provider / family. |
| Model Traits | The model's traits. |
| Model Type | Currently only BASE models are supported. |
| Modality | The output modalities supported for the model. |
| Status | Enabled, Disabled or Deprecated. |

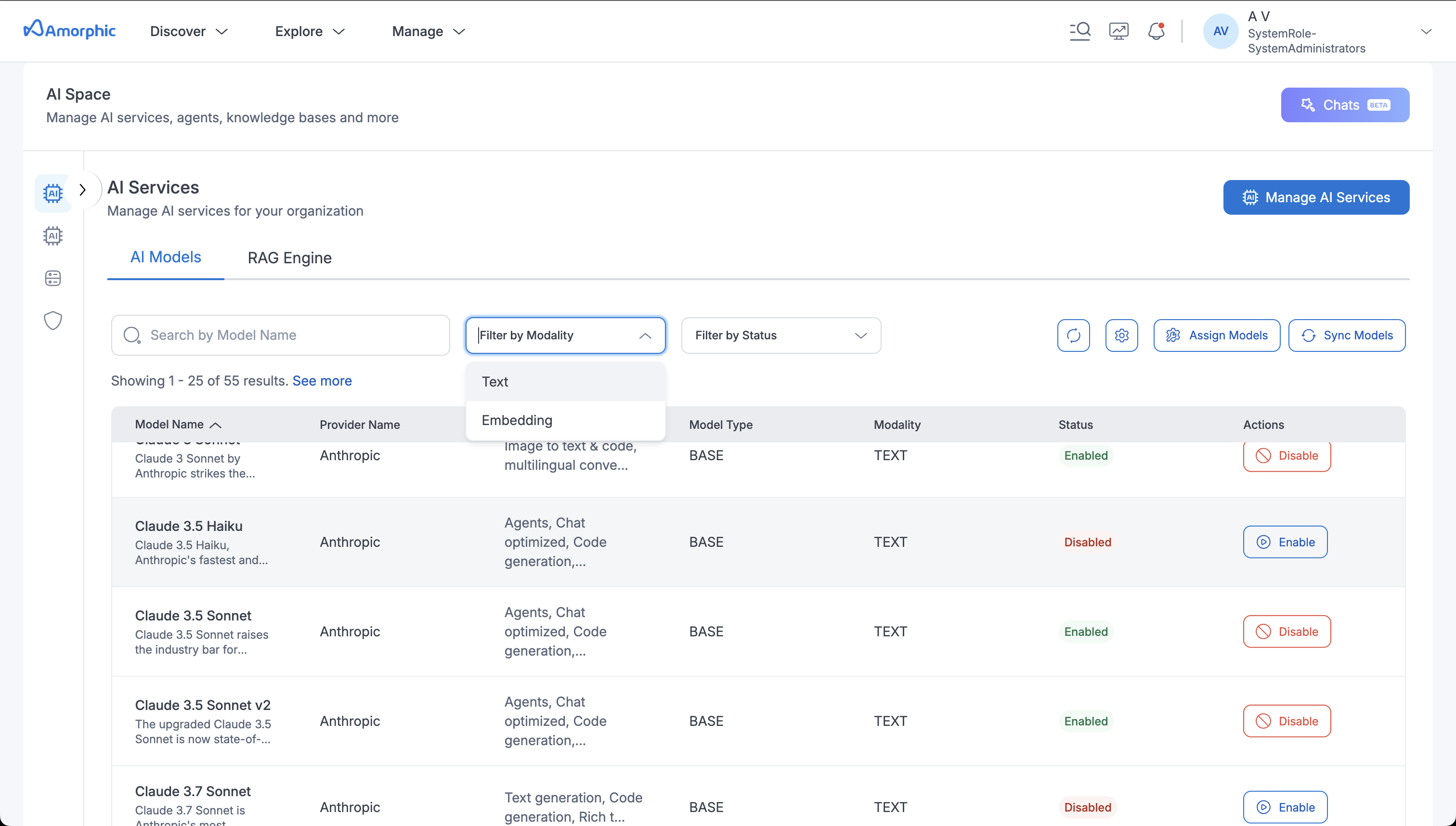

Use the search bar above the table to quickly find a model by name. Users can also filter models by Modality and Status, and enable or disable models directly from this page.

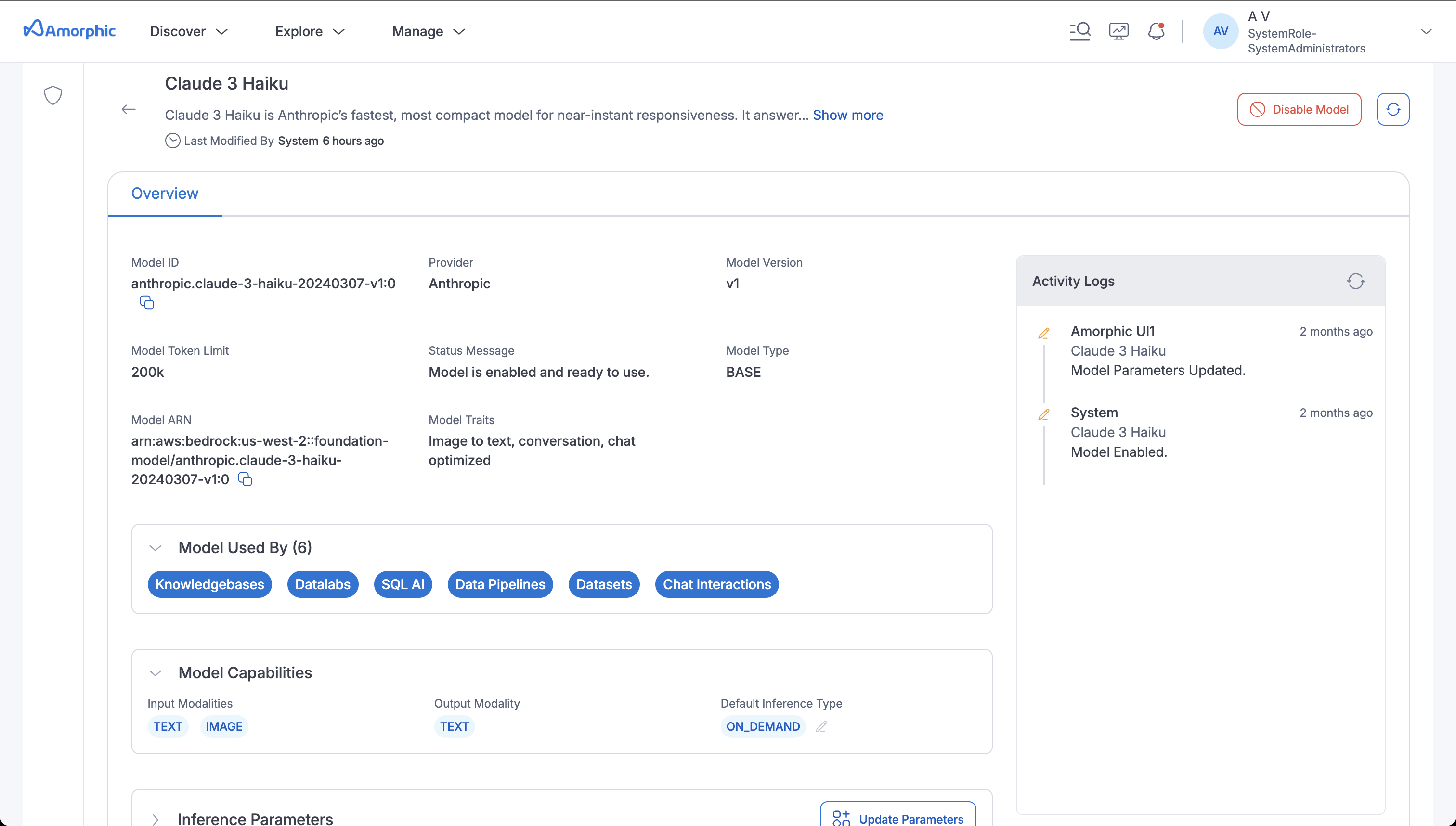

Model Details

Clicking any model opens its details page, which shows the following:

- Details about the model, including its version, token limit, and model ARN.

- The Model Used by section lists the Amorphic services to which the model has been assigned.

- The Model Capabilities section shows the supported input and output modalities for the model.

- The Inference Parameters section shows the configured parameters for the model and lets you update them if required.

- The Activity logs section captures all enable/disable actions performed on the model, as well as any inference parameter updates.

Enable / Disable Models

Users can enable or disable models here. Only enabled models can be consumed across different services. Before a model can be disabled, it must be unassigned from every service it is assigned to. If you try to enable a model that your AWS account does not have access to, Amorphic reports this; ask your AWS administrator to obtain access to the model.

Assign Models for services

This option lets users assign models to individual services: Knowledgebases, Agents, Datasets, Data Pipelines, SQL AI, Jobs and Datalabs. A model becomes available for consumption within a service only after it has been assigned to that service. Only enabled models can be assigned for service use.
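The enable, disable, and assign rules described above can be summarised as a small state model. This is a minimal sketch of one reading of those rules, not Amorphic's implementation: the `Model` class and the `assign` and `disable` functions are hypothetical names, and it assumes that disabling is rejected while assignments still exist.

```python
from dataclasses import dataclass, field


@dataclass
class Model:
    name: str
    enabled: bool = False
    assigned_services: set[str] = field(default_factory=set)


def assign(model: Model, service: str) -> None:
    """Assign a model to a service; only enabled models may be assigned."""
    if not model.enabled:
        raise ValueError(f"{model.name} must be enabled before it can be assigned")
    model.assigned_services.add(service)


def disable(model: Model) -> None:
    """Disable a model; it must first be unassigned from every service."""
    if model.assigned_services:
        services = ", ".join(sorted(model.assigned_services))
        raise ValueError(f"Unassign {model.name} from: {services}")
    model.enabled = False


model = Model("example-model", enabled=True)
assign(model, "SQL AI")

try:
    disable(model)  # rejected: the model is still assigned to SQL AI
except ValueError as err:
    print(err)

model.assigned_services.clear()  # unassign from all services
disable(model)                   # now succeeds
```

The ordering matters in both directions: enable first, then assign; unassign first, then disable.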

RAG Engine

The RAG Engine section provides comprehensive monitoring and management capabilities for your Retrieval-Augmented Generation infrastructure. The RAG engine is powered by Aurora PostgreSQL, which provides high-performance vector search capabilities, automatic scaling, and enterprise-grade reliability for handling complex AI workloads. This interface allows administrators to track engine performance, monitor cluster health, and analyze usage metrics.
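To make "vector search" concrete, the sketch below ranks a handful of stored embeddings by cosine similarity to a query embedding and returns the closest matches. It is a plain-Python illustration of the general idea only; it does not show how Aurora PostgreSQL or the Amorphic RAG engine performs the search, and the function names, document IDs, and two-dimensional vectors are made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, documents, k=2):
    """Return the IDs of the k documents most similar to the query vector."""
    ranked = sorted(
        documents.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in ranked[:k]]


# Toy embeddings; real embeddings have hundreds or thousands of dimensions.
docs = {"doc-a": [1.0, 0.0], "doc-b": [0.0, 1.0], "doc-c": [0.9, 0.1]}
print(top_k([1.0, 0.0], docs))
# ['doc-a', 'doc-c']
```

In a RAG workflow, the text of the top-ranked documents is what gets passed to the model as context alongside the user's question.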

Engine Status and Details

The RAG Engine displays the current status along with key infrastructure information:

- Engine Name: The unique identifier for your RAG engine instance

- Version: Current version of the RAG engine (e.g., 17.5)

- Engine Mode: Shows the operational mode (e.g., provisioned)

- Port: The port number for engine connectivity (e.g., 5432)

Monitoring and Metrics

The RAG Engine interface includes real-time monitoring capabilities with key performance indicators displayed at the bottom of the interface. These metrics help administrators:

- Monitor engine performance and health

- Track usage patterns and resource utilization

- Identify potential issues or bottlenecks

- Make informed decisions about scaling and optimization