Guardrails

Guardrails are regulatory controls that prevent AI services from returning undesirable responses to the user. They can be attached to agents, knowledge bases, and chats.

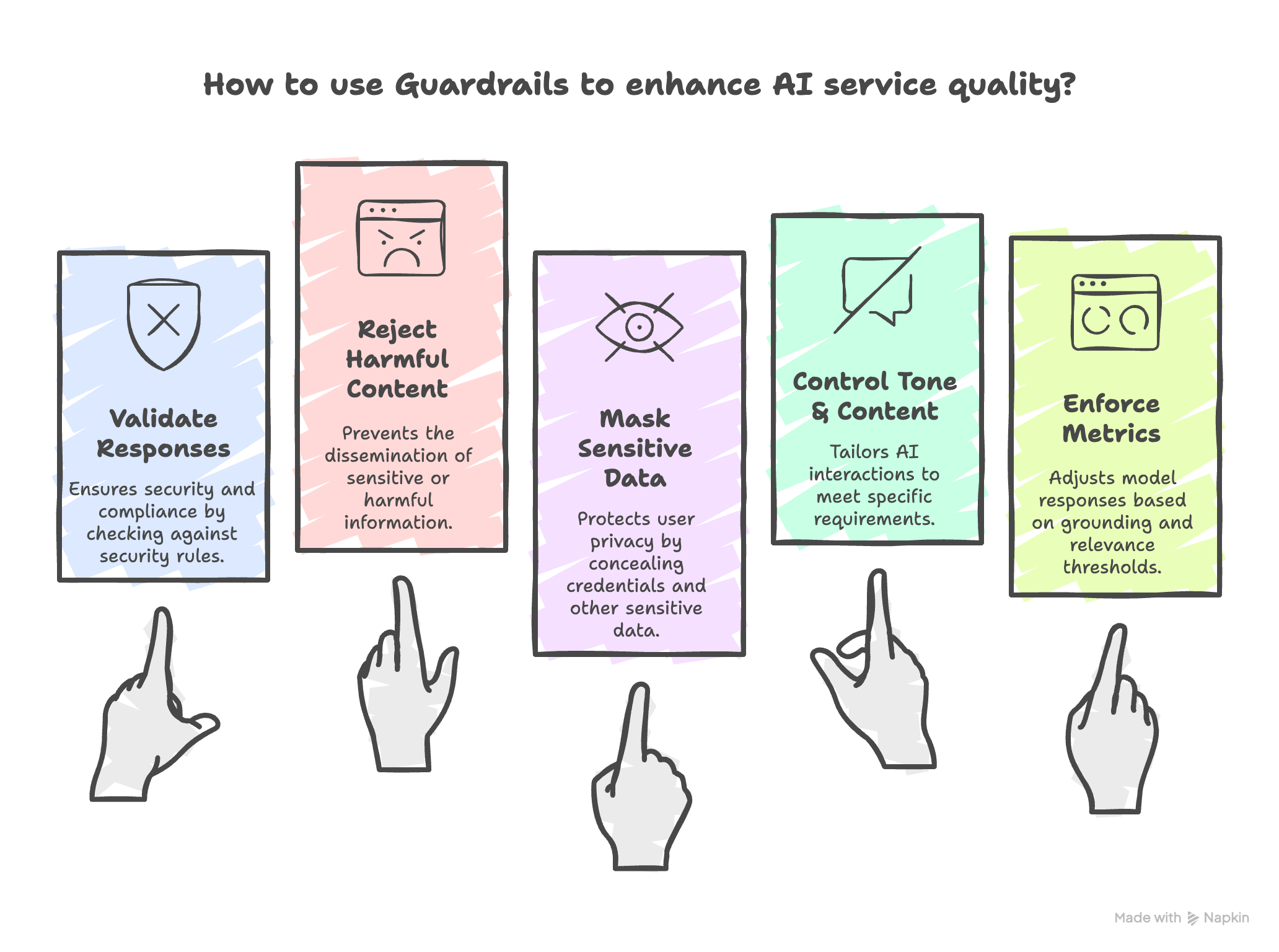

With Guardrails, you can:

- Validate model responses against security rules

- Reject responses that contain sensitive or harmful content

- Mask sensitive content such as credentials in the model responses

- Control the tone and content of AI interactions

- Enforce thresholds on metrics such as grounding and relevance to tailor model responses to your requirements

Guardrail Operations

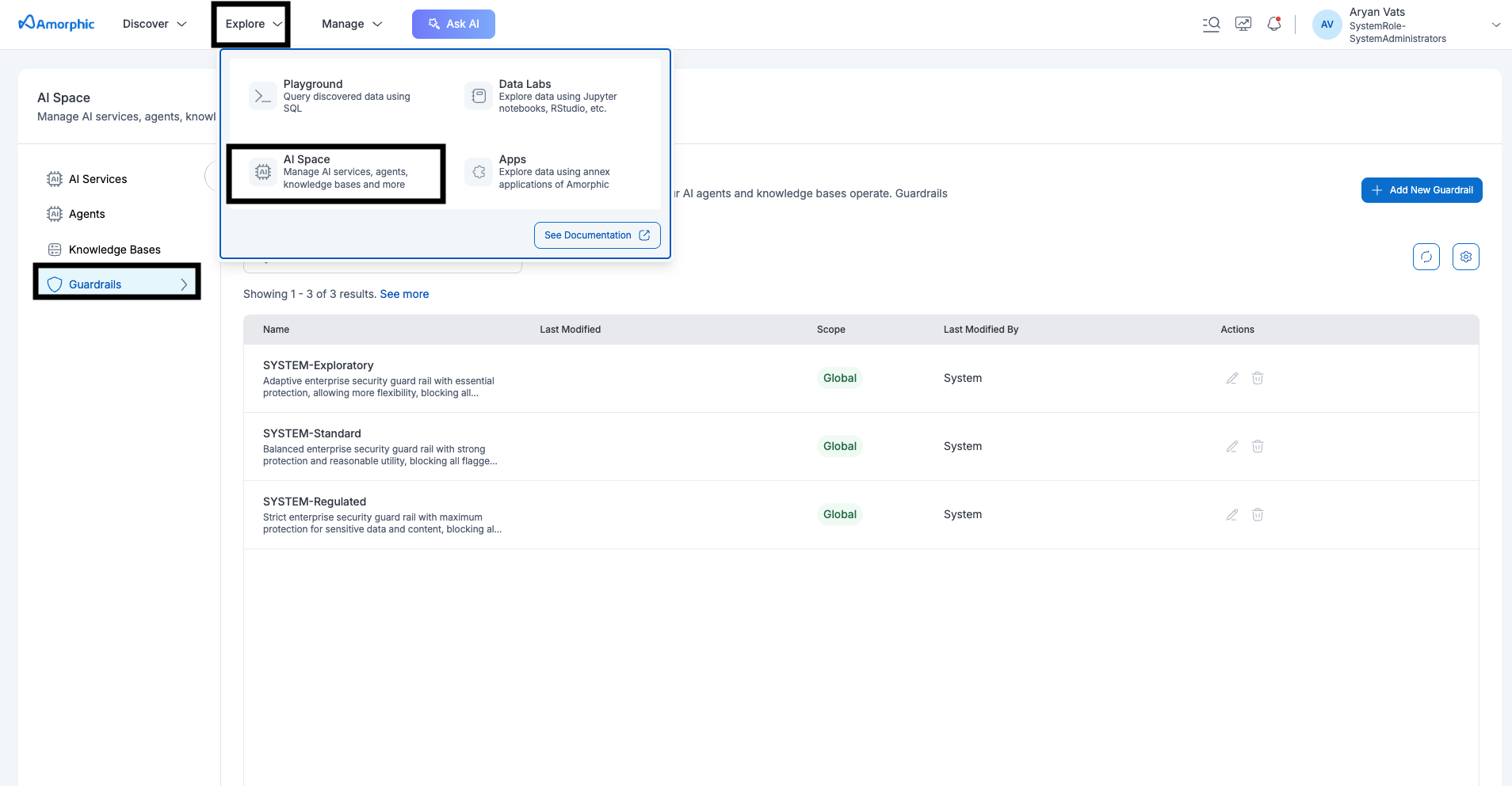

Guardrails can be found under the AI Space of Amorphic. Upon landing on the guardrails page, users will be able to view both system-defined and user-created guardrails.

Furthermore, Amorphic Guardrails support the following operations:

- Create Guardrail: Create a new guardrail with custom filtering criteria

- View Guardrail: View the guardrail details and its filtering criteria

- Edit Guardrail: Edit an existing guardrail to update its rules or metadata

Create Guardrail

- Go to Explore > AI Space > Guardrails

- Click Add New Guardrail

- Fill in the following information under the "Define Your Guardrail" section:

| Attribute | Description |
|---|---|
| Guardrail Name | Choose a descriptive name (e.g., "Politics-Guardrail"). |
| Description | A brief explanation of what the guardrail does (e.g., "Guardrail to prevent expression of political opinions"). |
| Scope | Select either Global (accessible across all users in your organization) or Private (for personal use only). |
| Message for blocked prompts | Enter a message to display when the guardrail blocks a user prompt. |
| Enable cross-region inference for your guardrail | Toggle this option on or off. |
| Content Filter Tier | Select a tier, such as Classic or Standard, which provides different levels of accuracy and language coverage. |

- Configure additional filters from the left-hand navigation:

| Filter Type | Description |
|---|---|
| Content Filters | Toggle specific harmful categories (e.g., Hate, Sexual, Violence, Insults, Misconduct, Prompt_attack) and set a strength level (None, Low, Medium, High). A higher strength filters more strictly. |
| Denied Topics | Add specific topics that the agent should avoid engaging with. For each topic, provide a name, a definition, and optional sample phrases. |
| Add Word Filters | Add custom words to the list of blocked words. |
| Add Sensitive Information Filters | Add filters for PII types (e.g., MAC_ADDRESS) or custom regex patterns. |
| Add Contextual Grounding Checks | Configure grounding and relevance thresholds to ensure the model's response is factually grounded and relevant to the user's query. |

- Click Create Guardrail

- Guardrail creation is instantaneous.

- The filtering criteria can be defined using regular expressions, keywords, or predefined system filters to govern content.

- Guardrails can be attached to Agents, Chats, and Knowledge Bases.

- If no guardrail is specified for one of these supported AI resources, the SYSTEM-Regulated guardrail is applied to it.
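
The form fields and the default-guardrail rule above can be pictured as a small data structure. The following is a minimal Python sketch, not Amorphic's API: the `GuardrailConfig` class, its field names, and the `effective_guardrail` helper are hypothetical and only mirror the options described in this section.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Allowed values, taken from the form fields described above.
SCOPES = {"Global", "Private"}
TIERS = {"Classic", "Standard"}
STRENGTHS = {"None", "Low", "Medium", "High"}
CATEGORIES = {"Hate", "Sexual", "Violence", "Insults", "Misconduct", "Prompt_attack"}

# Guardrail applied when a resource has none attached.
DEFAULT_GUARDRAIL = "SYSTEM-Regulated"


@dataclass
class GuardrailConfig:
    """Hypothetical in-memory model of the Create Guardrail form."""

    name: str
    description: str
    scope: str
    blocked_message: str
    tier: str
    cross_region_inference: bool
    # Maps a harmful category to its strength level.
    content_filters: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.scope not in SCOPES:
            raise ValueError(f"scope must be one of {sorted(SCOPES)}")
        if self.tier not in TIERS:
            raise ValueError(f"tier must be one of {sorted(TIERS)}")
        for category, strength in self.content_filters.items():
            if category not in CATEGORIES:
                raise ValueError(f"unknown content filter category: {category}")
            if strength not in STRENGTHS:
                raise ValueError(f"unknown strength for {category}: {strength}")


def effective_guardrail(attached: Optional[str]) -> str:
    """Return the attached guardrail name, or the system default if none is set."""
    return attached if attached else DEFAULT_GUARDRAIL
```

For example, `effective_guardrail(None)` returns `"SYSTEM-Regulated"`, while a resource with `"Politics-Guardrail"` attached keeps that guardrail.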

View Guardrail

To view a guardrail's details, click on its name from the guardrails listing page. The Guardrail Details page provides a comprehensive look at all its configurations.

This page is divided into two main tabs: Overview and Resources Attached.

Overview: This tab displays all the core settings of the guardrail. You can see:

| Setting | Description |
|---|---|
| Version | The current version of the guardrail (e.g., DRAFT). |
| Tier | The content filter tier selected during creation (e.g., CLASSIC). |
| Scope | Whether the guardrail is Global or Private. |
| Blocked Message | The custom message that will be displayed to the user when their prompt is blocked. |
| Content Filters | A list of harmful categories (Hate, Sexual, Violence, Insults, Misconduct, Prompt_attack) and their configured strength levels (e.g., LOW, MEDIUM, NONE). |
| Denied Topics | Lists specific topics that are disallowed, along with their definitions and sample phrases. |
| Blocked Words | Shows the custom list of words that are blocked (e.g., "Communism", "Marxism"). |
| Sensitive Information Filters | Displays any configured PII types (e.g., MAC_ADDRESS) and custom regex patterns. |

Resources Attached: This tab shows which AI resources (Agents, Knowledge Bases) are currently using this guardrail.

At the top right of the details page, you can use the Test Guardrail button to test the guardrail's functionality with a sample input. Additionally, the Activity Logs panel on the right provides a history of recent actions, such as when the guardrail was created or modified.

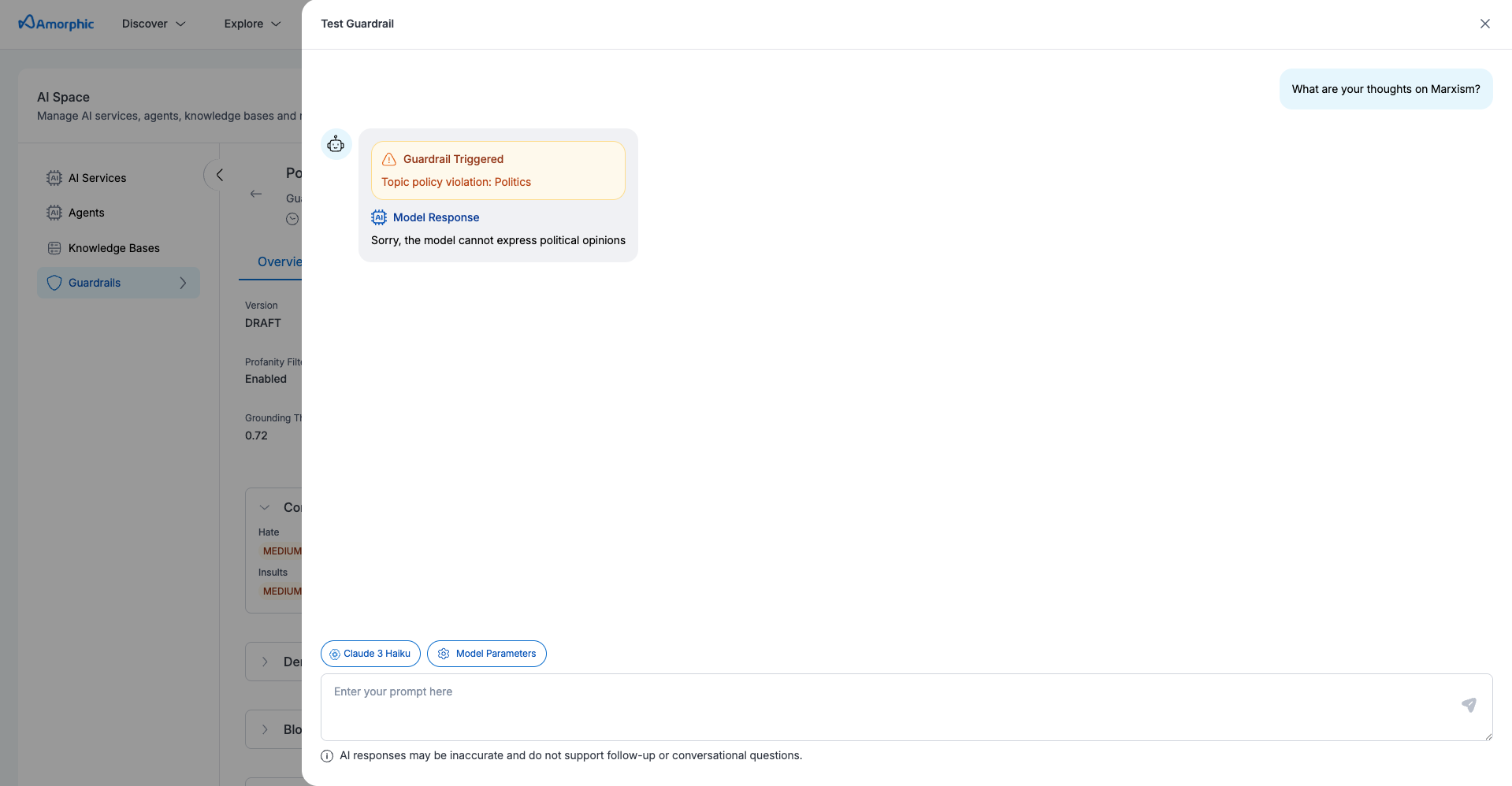

Test Guardrail

On the guardrail's details page, the Test Guardrail feature lets you verify that a guardrail blocks undesirable responses as intended. Enter sample text and confirm whether the guardrail filters or blocks the content according to its configuration. This is a crucial step for validating a guardrail before putting it into active use.
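
To make the idea concrete, here is a rough local sketch of what such a test checks, assuming a guardrail that has only a blocked-word list and regex-based sensitive-information patterns. The `evaluate` function, its return values, and the MAC address pattern are illustrative assumptions; the actual Test Guardrail button runs the check inside Amorphic, and its matching logic may differ.

```python
import re
from typing import Dict, List, Tuple

# Illustrative regex for a MAC address such as 00:1A:2B:3C:4D:5E (an assumption,
# not the pattern Amorphic uses for its MAC_ADDRESS PII type).
MAC_ADDRESS_PATTERN = r"\b(?:[0-9A-Fa-f]{2}[:-]){5}[0-9A-Fa-f]{2}\b"


def evaluate(
    text: str,
    blocked_words: List[str],
    mask_patterns: Dict[str, str],
    blocked_message: str,
) -> Tuple[str, str]:
    """Return (action, output), where action is BLOCKED, MASKED, or PASSED."""
    # Block on any whole-word, case-insensitive match of a blocked word.
    for word in blocked_words:
        if re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE):
            return "BLOCKED", blocked_message

    # Otherwise replace each sensitive match with a placeholder label.
    masked = text
    for label, pattern in mask_patterns.items():
        masked = re.sub(pattern, f"[{label}]", masked)

    if masked != text:
        return "MASKED", masked
    return "PASSED", text
```

Running a few sample inputs through a function like this (one that should be blocked, one that should be masked, and one that should pass) is the same kind of check the Test Guardrail feature is meant for.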

Edit Guardrail

Users can edit a guardrail to update its existing metadata and filtering criteria. To do so, navigate to the Guardrail Details page and click on the "edit" option (three dots in the top corner).

Here's what you can edit:

| Editable Setting | Description |
|---|---|
| Description | Change the brief explanation of what the guardrail does. |
| Message for blocked prompts | Update the custom message that is displayed when the guardrail blocks a user prompt. |
| Cross-Region Inference | Toggle the option to enable or disable cross-region inference for the guardrail. |
| Content Filters | Modify the strength levels (e.g., Low, Medium, High) for harmful categories like Hate, Sexual, and Violence. |
| Denied Topics | Add new topics, edit existing definitions and sample phrases, or remove topics. |
| Word Filters | Add or remove words from the custom list of blocked words. |
| Sensitive Information Filters | Add new filters for PII types or custom regex patterns, and edit or remove existing ones. |
| Contextual Grounding Checks | Adjust the grounding and relevance thresholds. |

What you cannot edit:

| Non-Editable Setting | Reason |
|---|---|
| Guardrail Name | The name is permanent once the guardrail is created. |
| Scope | You cannot change a guardrail from Global to Private or vice-versa. |
| Content Filter Tier | The tier (Classic or Standard) cannot be changed. If you need a different tier, you must create a new guardrail. |
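
The split between editable and non-editable settings can be expressed as a simple validation step. The sketch below is only an illustration of the rule: `apply_edit`, the dictionary keys, and the error message are hypothetical and do not represent how Amorphic enforces it.

```python
from typing import Any, Dict

# Settings that cannot be changed after creation, per the table above.
# The key names are hypothetical.
IMMUTABLE_FIELDS = {"name", "scope", "tier"}


def apply_edit(current: Dict[str, Any], changes: Dict[str, Any]) -> Dict[str, Any]:
    """Return an updated copy of `current`, rejecting changes to immutable fields."""
    rejected = sorted(
        key
        for key, value in changes.items()
        if key in IMMUTABLE_FIELDS and value != current.get(key)
    )
    if rejected:
        raise ValueError(f"cannot edit immutable fields: {', '.join(rejected)}")
    # Editable settings are merged over the existing configuration.
    return {**current, **changes}
```

Under this sketch, changing `description` or `blocked_message` succeeds, while an attempt to switch `tier` from Classic to Standard raises an error, matching the guidance to create a new guardrail instead.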

System Guardrails

Amorphic provides a set of pre-baked guardrails that are managed by the system. These guardrails are read-only and cannot be modified or deleted by users. They are designed to provide a baseline for security and governance and are available to all users by default.

Currently, there are three System Guardrails that are readily available out of the box, in descending order of strictness:

- SYSTEM-Regulated: Strict enterprise security guardrail with maximum protection for sensitive data and content, blocking all flagged information.

- SYSTEM-Standard: Balanced enterprise security guardrail with strong protection and reasonable utility, blocking all flagged information.

- SYSTEM-Exploratory: Adaptive enterprise security guardrail with essential protection, allowing more flexibility, blocking all flagged information.


Example Use Cases

Politics Guardrail

Create a guardrail to prevent an AI application from expressing political opinions:

- Guardrail Name: Politics-Guardrail

- Description: Guardrail to prevent expression of political opinions

- Denied Topics:

  - Topic Name: Politics

  - Topic Definition: Any political discussion not about objective facts

  - Sample Phrases: "Express your views on the communist party of China"

- Blocked Words: "Communism", "Marxism"

- Blocked Message: "Sorry, the model cannot express political opinions"
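
The same settings can be written down as plain data for review or version control. This is a sketch only: the dictionary keys and the `respond` helper are hypothetical, and the helper approximates just the blocked-word check with a simple substring match. Denied-topic detection is performed by the guardrail service and is not reproduced here.

```python
from typing import Any, Dict

# Values come from the example above; key names are hypothetical.
politics_guardrail: Dict[str, Any] = {
    "name": "Politics-Guardrail",
    "description": "Guardrail to prevent expression of political opinions",
    "denied_topics": [
        {
            "name": "Politics",
            "definition": "Any political discussion not about objective facts",
            "sample_phrases": ["Express your views on the communist party of China"],
        }
    ],
    "blocked_words": ["Communism", "Marxism"],
    "blocked_message": "Sorry, the model cannot express political opinions",
}


def respond(model_output: str, guardrail: Dict[str, Any]) -> str:
    """Return the blocked message if the output contains a blocked word."""
    lowered = model_output.lower()
    if any(word.lower() in lowered for word in guardrail["blocked_words"]):
        return guardrail["blocked_message"]
    return model_output
```

With this configuration, an output that mentions "Marxism" is replaced by the blocked message, while an unrelated factual answer is returned unchanged.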