Redshift Datasets

Redshift datasets allow users to create datasets in Amorphic utilizing the power of AWS Redshift. Amorphic enables users to store CSV, TSV, XLSX and Parquet files in Amazon S3 with Redshift as the target location. This feature includes optional partial data validation, which is enabled by default. The validation process helps detect and correct corrupt or invalid data files while supporting a variety of data types, including:

-

Strings/VARCHAR

-

Integers

-

Double

-

Boolean

-

Date

-

Timestamp

-

Complex data structures

The AWS Athena CSV Parser/SerDe has the following limitations:

- Embedded line breaks in CSV files are not supported.

- Empty fields in columns defined as a numeric data type are not supported.

As a workaround, Users can import them as string columns and create views on top of it by casting them to the required data types.

How to Create Redshift Datasets?

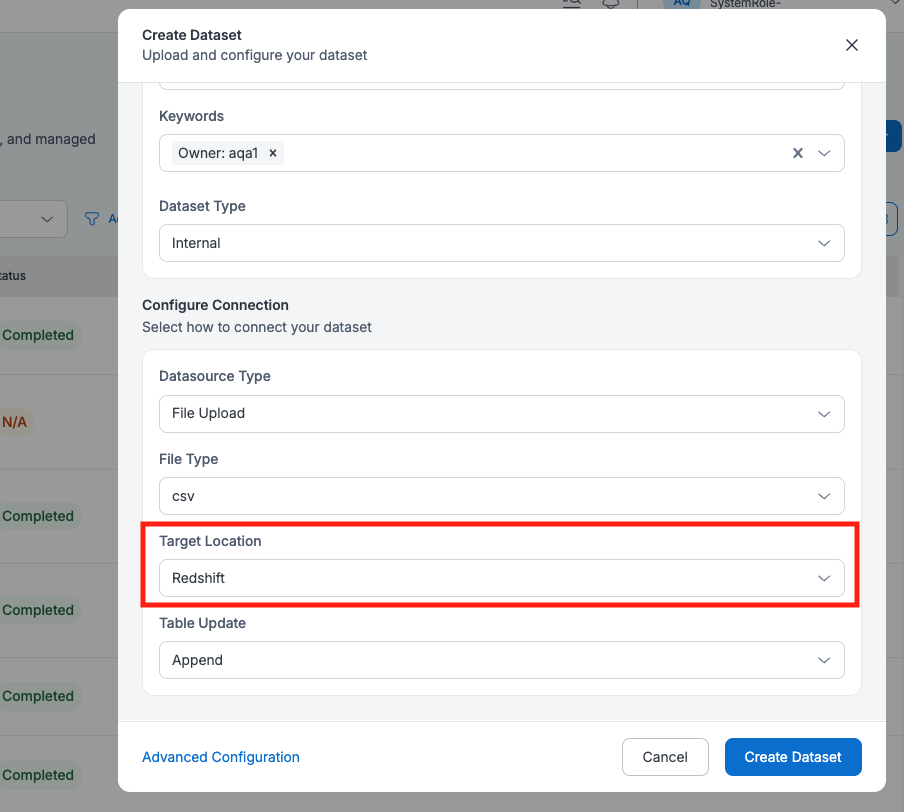

Users can create new datasets with Redshift as target location. Currently, only structured data with CSV, TSV, XLSX, and Parquet file formats are supported. Dataset can be created by using either of the three ways:

- Using already defined Redshift Datasets Templates

- Importing required JSON payload

- Using the form and entering the required details

Update method can be one of the following:

- Append: With this update method, data will be appended to the existing data.

- Reload: With this update method, data will be reloaded to the dataset. The following two options are exclusive for the Reload type of dataset.

- TargetTablePrepMode

- recreate With this mode, existing table and data will be deleted and the new data will be loaded in a new table.

- truncate With this mode, only existing data will be deleted and the new data will be loaded in the same table.

- Based on the above reload settings, data reload process times can vary.

- User can also set the optional field

Skip Trashas Yes to delete the old data permanently instead of moving it to trash during the data reload process.

- TargetTablePrepMode

- Latest Record this update method allows querying latest data based on the

Record KeysandLatest Record Indicatordefined.

Redshift Datasets Permissions

- Users with owner and editor access on Redshift dataset has

USAGEandCREATEpermissions on the Redshift cluster schema (Domain), as well as'SELECT', 'INSERT', 'DELETE', 'UPDATE'permissions on the Redshift cluster table (Dataset). - User with read-only access on Redshift datasets have

USAGEpermission on the Redshift cluster schema (Domain), as well asSELECTpermission on the Redshift cluster table (Dataset).

Redshift Column/Table Constraints and Attributes Support

Users can create Redshift datasets with more table/column constraints and attributes. To know more, refer AWS Redshift Create Table documentation.

-

Table Constraints:

- UNIQUE : Specifies that the column can contain only unique values.

- PRIMARY KEY : Specifies that the column is the primary key for the table.

infoNote Unique and Primary key constraints are informational only and they aren't enforced by the Redshift. Redshift automatically convert columns that defined as

PRIMARY KEYtoNOT NULLby default. -

Column Attributes:

- IDENTITY : Specifies that the column is an IDENTITY column. To add identity column, user need to provide values for Identity Column Name, Seed and Step Values.

-

Column Constraints:

- NOT NULL : Specifies that the column isn't allowed to contain null values.

-

Sort Key: Determines the order in which rows in a table are stored. To know more, refer Redshift Sort Keys

- Compound (Need to specify the Sort Keys)

- Interleaved (Need to specify the Sort Keys)

- None (default option)

-

Distribution Type: Determines how the data will be distributed in the redshift cluster. To know more, refer Redshift Distribution Styles

- Auto (default option)

- Even

- Key Based (Need to specify the Distribution Key)

- Distribute All Keys

From Amorphic 2.3 onwards, Users can create Redshift datasets with additional datatypes such as super and varbyte.

Users can utilize the varbinary column type also to store variable-length binary values with a fixed limit starting from Amorphic version 2.5

-

Super:

- This can store any semistructured data or values.

- The given values must be appropriately serialized to JSON inorder to be stored properly in the backend.

- User cannot define Super columns as either a distribution or sort key. For more limitations, refer AWS Redshift Super datatype limitations

-

Varbyte:

- This supports storing binary values.

- CSV, TSV and XLSX treats the given column values in the file as hexadecimal and converts them to binary while storing it in the backend. However it will display the values only in hexadecimal format.

- For Parquet datatype, data needs to ingested properly for varbyte column by properly converting into hexadecimal. Incorrect format results in an error or value being converted to hexadecimal wrongly and captured in the backend.

- User cannot define Varbyte columns as sort key for interleaved sort type.

-

Varbinary:

- This is one of the aliases for the

varbytedata type

- This is one of the aliases for the

Redshift Data Conversion Parameters Support

With the release of version 1.14, users will be able to create Redshift datasets with Data Conversion Parameters. When data is loaded into Redshift, Amorphic will implicitly convert strings in the source data to the data type of the target column.

Data Conversion Parameters can be used to specify a conversion that is different from the default behavior, or if the default conversion results in errors when data is loaded into the Redshift database. For more information, please refer to the AWS Data Conversion Parameters documentation.

Below are different Data Conversion Parameters that are supported:

'ACCEPTANYDATE', 'ACCEPTINVCHARS', 'BLANKSASNULL', 'DATEFORMAT', 'EMPTYASNULL', 'ENCODING', 'ESCAPE', 'EXPLICIT_IDS', 'FILLRECORD', 'IGNOREBLANKLINES', 'NULL', 'REMOVEQUOTES', 'ROUNDEC', 'TIMEFORMAT', 'TRIMBLANKS', 'TRUNCATECOLUMNS'

For data conversion parameters DATEFORMAT, TIMEFORMAT and NULL, the user must provide a valid argument. Arguments for ENCODING and ACCEPTINVCHARS are optional. No arguments are required for all other data conversion parameters.

Users can find data conversion parameters for Redshift datasets in the Profile section. The GIF below shows how to add or edit the data conversion parameters for a Redshift dataset.

For Redshift use cases with a large number of incoming files, the user should turn ON dataload throttling and set a maximum limit of 90 for redshift.