JDBC Bulk data load

To perform Bulk data migration, users need to create dataflow within the JDBC datasource. A bulk data load dataflow in Amorphic can be set up using the "Add Dataflow" option, which is available on the Datasource Details page under the "Dataflow" tab.

As part of Amorphic bulk data load, datasets are not required to be created already as a pre-requisite. When user creates a dataflow then datasets will be created as part of the dataflow and will be used for data ingestion. The user needs to toggle the 'CreateDataset' option during dataflow creation. These datasets are tightly coupled with the dataflow and cannot be used for normal data upload either from UI or any other process.

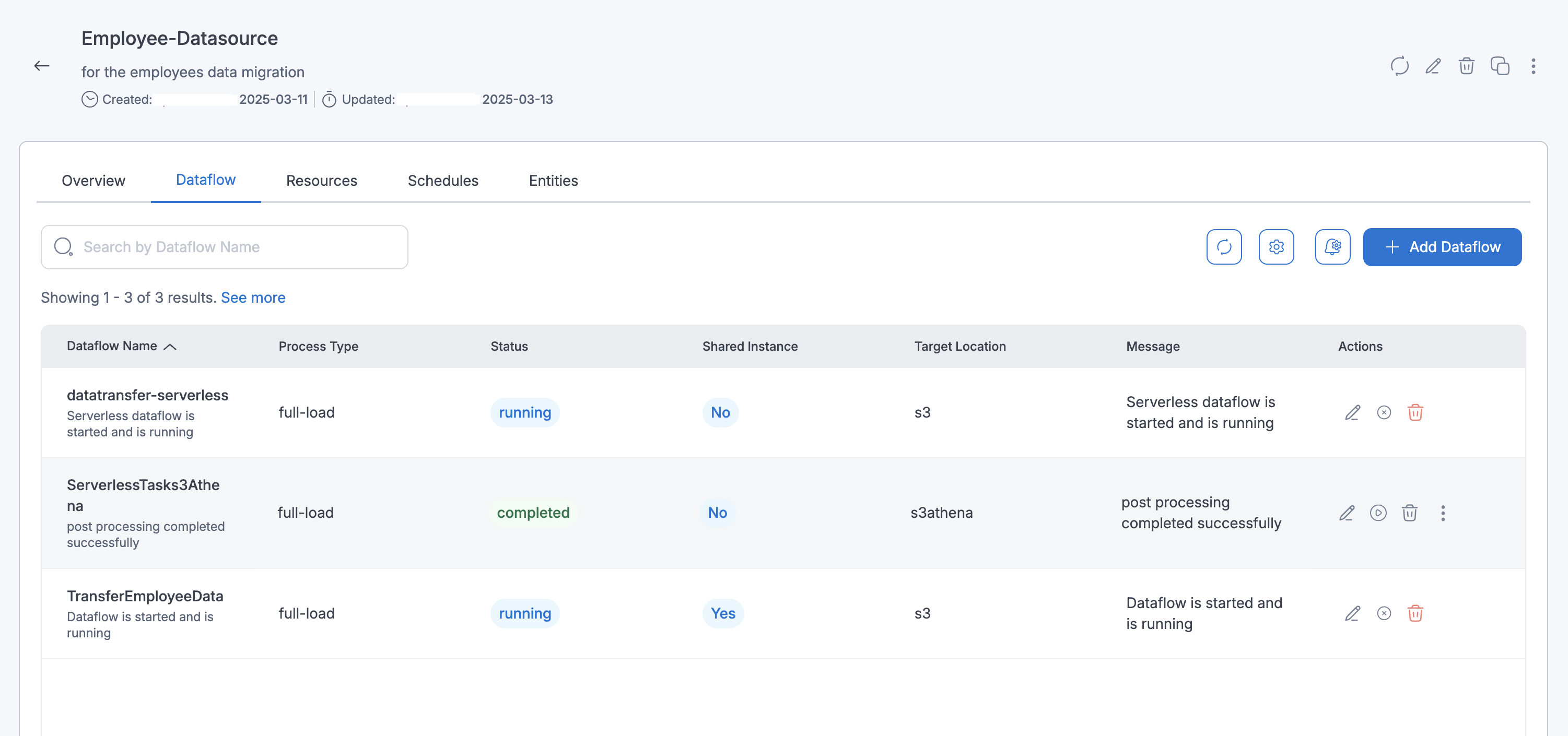

The following picture depicts the dataflows page in Amorphic

Dataflows

Dataflow refers to a unit of work that migrates data from a source database to a target database. There are two types of dataflows:

- Those with on-demand instances

- Serverless replication

The steps for creating both types of dataflows are outlined below.

How to create a Dataflow

Below are the steps that are required to create a bulk data load dataflow in Amorphic.

Dataflow Specifications

-

Dataflow Name: Name of the dataflow to be used, an identifier of the dataflow. -

Process Type: Full Load, Change Data Capture (CDC) & Full Load and CDC- Full Load : This option simply migrates the data from your source database to your target database.

- Full load and CDC (Migrate existing data and replicate ongoing changes) : This option performs a full data load while capturing changes on the source. After the full load is complete, captured changes are applied to the target and henceforth.

- CDC only (Replicate data changes only) : In this option, only ongoing data changes are captured.

-

Target Location: Select the target where the data has to be migrated.Note- For Redshift target dataflows, the exported file will not include headers. This is the default behavior for the Redshift target dataflows.

- In contrast, for S3Athena target types, the file will include headers by default.

-

Sync To S3: Only applicable when the target location is selected to DWH, this option enables the user to choose whether data should be copied to S3 or not either for full-load or CDC related dataflows. For CDC type of dataflows to sync the data to S3, a schedule needs to be created in the schedules page after selecting this option as Yes. -

Data Format: Data Format is used to determine the target data format of a dataflow. For S3 and S3Athena target dataflows files will be created for the datasets in the format that is specified here. For DWH type of targets this field is used to determine the format of data to be exported to S3 when SyncToS3 is selected as "yes" and will be shown only if it is applicable for DWH. This is an optional field and defaulted to "csv" if not specified. -

CDC Start Time(Applicable only for CDC): Custom start time which is used as a starting point to capture the data from the source. -

CDC Stop Type: Custom stop type which is used as stopping point of the change data capture. Available options are Server Time & Commit Time -

CDC Stop Time: Custom stop time which is used as stopping time of the change data capture.NoteBoth CDC Stop Type & CDC Start Time are required to use the functionality of CDC stop time. This setting is only supported for DWH targets (i.e., Either AuroraMysql or Redshift)

-

Target Extra Connection Attributes(Optional): Extra connection attributes to be applied to target for data migration jobs. Please refer below documentation for the available extra connection attributes.- S3 Extra connection attributes

- AuroraMysql Extra connection attributes

- Redshift Extra connection attributes

NoteFor S3 target type of datasets Amorphic uses addColumnName=true;timestampColumnName=RecordModifiedTimeStamp;includeOpForFullLoad=true as ExtraConnectionAttributes. When user provides extra connection attributes in the option above then predefined settings will be overwritten, user have to make sure to add these flags when creating the dataflows to be in sync with other data loads.

-

Source Extra Connection Attributes(Optional): Extra connection attributes to be applied to the source db for data migration jobs. Please refer below documentation for the available extra connection attributes.- Oracle connection attributes

- MySQL Extra connection attributes

- PostgreSQL Extra connection attributes

- Microsoft SQL Server connection attributes

- IBM Db2 (LUW) Extra connection attributes

NoteAdditional source connection attributes can only be added within the DataflowConfig attribute while edit payload through API. Below is an example for the same:

Source extra connection attributes sample json{

"SourceExtraConnectionAttributes": "parallelLoadThreads=5;maxFileSize=512"

}

Dataset Specs

-

Create Datasets for each table: Set this option to True to create new datasets as the targets. Disable this option to select existing Amorphic datasets as the targets for each source table. This setting applies on a per-dataflow basis and affects all selected source tables. For more details, refer to the Ingestion Configuration section below. -

Target Table Preparation Mode: To indicate how to handle loading the target at load startup. Available options are Truncate & Append, "Truncate" will delete the data in the dataset before load while "Append" will add the data to existing.NoteThis is an optional field. If the user opts to select existing Amorphic datasets instead of creating new ones during dataflow creation and no option is selected for this attribute, it will default to Append in the backend.

Replication Configuration

1. Dataflow by using on-demand instances

-

Use Serverless Replication: When a user wants to utilize instance-provisioned data migration, proceed with this feature disabled (it is disabled by default). If your workload is predictable, instance types may offer cost benefits. -

Use Shared Instance: If a shared instance should be used for a dataflow then this option should be selected as Yes and a drop down appears where user can selected one of the shared instaces that are available for the connection. This is an optional field and will be defaulted to No if no option is specified. -

Replication Instance AZ: Availability zone to be used to launch the replication instance. When an AZ is selected, all the available instance classes in that AZ will be shown and one can be selected from the list. -

Replication Instance Class: Type of DMS instance class to be used for the data migration (When user selects the instance here then backend instance selection will be nullified). Please choose approx Instance based on the data volume of all the tables. -

Allocated Storage: Amount of storage space you want for your replication instance. AWS DMS uses this storage for log files and cached transactions while replication dataflows are in progress. -

DmsVersion: This setting is used for the creation of DMS replication Instance, if no value is provided by the user then Amorphic picks the latest non-beta version that is available in that AWS region. This setting is applicable to all dataflow types. This parameter is optional, and users can update it as well.NoteBoth the 'Allocated Storage' and 'Replication Instance Class' parameters are required to use the instance setting provided by the user for the DMS dataflow, if above two parameters are defined then Approx Table Size parameter that was selected in the table metadata page will not have any affect and Amorphic uses the instance setting provided by the user else instance config is decided based on the aggregate sum of all Table Sizes in the dataflow.

2. Serverless replication

-

Use Serverless Replication: To access serverless functionality, the user should select this option as Yes. The Serverless replication feature eliminates replication instance management dataflows like capacity estimation, provisioning, cost optimization, and managing replication engine versions etc. Choose serverless for unpredictable migrations, as well as for simplicity and ease of use. This can scales automatically based on your workload. Keep in mind that if your workload is predictable, on-demand instance types may offer cost benefits. -

Max Capacity Units: Maximum DMS Capacity Units (DCU) that AWS DMS can provision, depending on your replication's capacity prediction. This is also the maximum DCU that autoscaling could scale up to -

Min Capacity Units: Minimum number of DCU that AWS DMS will provision. This is also the minimum DCU that autoscaling could scale down to. -

MultiAZ: Setting this optional parameter creates a standby replica of your replication in another Availability Zone for failover support. -

ReplicationAZ: Availability zone to be used to launch the serverless replication.

All other attributes remain the same as dataflows with on-demand instances. However, there are some limitations with serverless replication. Notably, serverless dataflows do not support Views in selection and transformation rules.

Table(s) selection

- Select all the tables that are needed to be loaded into Amorphic.

- Schemas and tables can be filtered if necessary with the filter on the top.

- After selecting the tables, click on Next which is at the end of the page to proceed with the metadata edit/update.

Ingestion Configuration

The user can configure data ingestion in the dataflow with two options: 'Create Datasets' set to true or false.

1. Dataflows Involving the Creation of New Datasets

-

Informations in this page will be used as metadata to register the datasets in Amorphic.

Dataset Name: This option is used to edit the Dataset Name's in bulk by adding prefix/suffix to the generated names.Dataset Description: Edit the generated description by using this option.Approx Table Size: This parameter is used to determine the type of Instance to be used while running the data migration and has nothing to do with the metadata of the dataset. Please select approx size of the source table with this parameter so that instance can be decided accordinglyDomain: Edit domain for the datasets.Keywords: Edit Keywords for the datasets.Is Spatial Dataset: Enable this option to treat the dataset as spatial. When turned on, the user must configure spatial settings for the dataset.Spatial Columns: Select the source columns that contain spatial data.CRS Column: Select the source column that contains the CRS (Coordinate Reference System) value for the dataset.

Note- Spatial configuration is currently supported only for SQL Server as the source, with Redshift or S3 Athena as target location.

- To display maps correctly, select valid spatial and CRS columns. This configuration can be updated later from the dataset page.

2. Dataflows Using Existing Amorphic Datasets

Dataset Name: This option is used to select the dataset in Amorphic where the data needs to be ingested. User can select the datasets based on selected target location. For example, If user selects S3 as target location then only S3 datasets are applicable. For S3 and S3Athena datasets, only append-type datasets can be selected, while for Redshift datasets, both append and update (latest record) types can be selected.Size: This parameter is used to determine the type of Instance to be used while running the data migration and has nothing to do with the metadata of the dataset. Please select approx size of the source table with this parameter so that instance can be decided accordingly

- Users should ensure they select the correct dataset when using CreateDataset as False for the Dataflows.

-

In case of S3 target location whole schema can also be selected instead of individual selection.

-

Bulk Edit:

- Bulk Edit is used to update/edit metadata of the datasets instead of editing/updating the pre-filled metadata for each dataset

-

Enable Filters, Transforms: This option will enable additional config that can be applied either on the source tables or on the target data while running the dataflow and during the data ingestion.Filter & Transformation Rules

-

Filter rules are used to filter the data while ingesting the data to Amorphic. Below are the rules that are supported during ingestion.

- Equal to

- Greater than or Equal to

- Less than or Equal to

-

Transformation rules are used to modify the column names or datatype of the table while ingesting the data to target, below is the list of transformation rules that are available.

- Rename To

- Remove Column

- Make UpperCase

- Make LowerCase

- Add Prefix

- Remove Prefix

- Replace Prefix

- Add Suffix

- Remove Suffix

- Replace Suffix

- Change Data Type (Only Integer, Double & String data types are available for conversion now)

- Add Column

- Randomize Digits

- Hashing Mask

- Mask Digits

- Capture Old Values

Below is the sample table which illustrates the column names that will be created in Amorphic when Transformation rules are applied on the columns. An example name of column_name is used to for all the rules.

Rulename Old value New value Result Rename To N/A new_column_name new_column_name Remove Column N/A N/A N/A Make UpperCase N/A N/A COLUMN_NAME Make LowerCase N/A N/A column_name Add Prefix N/A prefix prefix_column_name Remove Prefix column/COLUMN N/A name Replace Prefix column col col_name Add Suffix N/A _suffix column_name_suffix Remove Suffix _name/_NAME N/A column Replace Suffix _name _nm column_nm Change Data Type N/A N/A Datatype changes Add Column N/A new_column_name new_column_name Randomize Digits N/A N/A generate_random_digits Hashing Mask N/A N/A hashing_the_values Mask Digits N/A * masked_digits Capture Old Values N/A old_[column_name]_backup old_column_backup

-

For detailed examples and step-by-step configuration of all transformation rules, see our JDBC Bulk Data Load Transformation Rules documentation.

When multiple transformation rules are applied on a single column then AWS will consider only the first rule that is applied and rest others are ignored. Incase if multiple transformation rules needed to applied on a column then consider using Rename Column Transformation rule

Please follow the below animation as a reference to create a dataflow.

After successful creation, the dataflow can be started with the 'Start' option

View Dataflow Details

Once the dataflow is started, the status of the dataflow will be changed to running and the latest status can be fetched with the page refresh page.

Entity configuration can be changed for a running dataflow if required. Editing an entity can only be done when the dataflow is in the Stopped state

Dataflow Stats, Executions and Logs

To view data migration statistics, simply click on the dataflow name in the dataflow details section. Users can also access logs by clicking the three dots in the top right corner. Please refer to the video below for a demonstration.

For Full-load type of dataflows an additional tab called Executions will be shown which gives the run history of the dataflow and their corresponding metrics.

Dataflow statistics can be downloaded using the Download Stats option from the more (three vertical ellipses) option in the dataflow details page.

Edit Payload

Edit payload is also which is used to add any custom table mapping rules that are not possible via UI. User can click on Edit payload option from the UI and can add the rules as required.

Below image shows where the Edit payload option is avaialble.

Additional configuration

Amorphic supports several advanced configuration options that can be applied to dataflows. These configurations are optional and will use default values if not specified by the user.

These advanced settings can be configured through the Edit Payload feature available in the user interface.

Users must add these configurations within the DataflowConfig attribute. Below is the list for the same:

-

TaskRetryConfig : If a dataflow fails for some reason in its first attempt then based on this configuration dataflow will be retried. This configuration can be applied only while edit payload through API and is applicable to full-load dataflows only. Below is the payload reference.

Add TaskRetryConfig to the payload

"TaskRetryConfig": {

"AutoRetry": <string> ("enable" or "disable" are the allowed values),

"MaxRetries": <Integer> (Allowed values are between 1 &3)

"RetryInterval": <Integer> (Allowed values are between 10 & 60)

}

Notes:-

RetryInterval is the metric in minutes, i.e. if user gives the input as 10 then dataflow waits for 10 mins before a restart

When "AutoRetry" is "disable" then "MaxRetries" & "RetryInterval" are not applicable -

AdditionalTaskSettings : Any additional settings can be applied to a dataflow with the help of this configuration. Below is the AWS documentation with the list of all available settings.

Below is an example payload where both TaskRetryConfig & AdditionalTaskSettings are applied on a dataflow

"TaskRetryConfig": {

"AutoRetry": "enable",

"MaxRetries": 2

"RetryInterval": 10

},

"AdditionalTaskSettings": {

"FullLoadSettings": {

"MaxFullLoadSubTasks": 8,

"CommitRate": 50000

}

Reload table/s in a Dataflow

Users can reload specific tables in a dataflow (both failed and succeeded tables) instead of re-running the entire dataflow. This feature is particularly useful when only certain tables need to be reprocessed. Amorphic supportes reload table/s in a dataflow via API only feature. Below is the API & the method to be used for reloading table/s in a dataflow.

/datasources/datasource_id/dataflows/id?action=reload-tables & PUT method

{

"ReloadTables": [

{"SchemaName": "schema1", "TableName": "table1"},

{"SchemaName": "schema2", "TableName": "table2"},

{"SchemaName": "schema3", "TableName": "table2"},

.

.

.

]

}

Addtional Info

-

Only the configurations listed below can be edited during dataflow updates.

- TargetTablePrepMode

- DatasetDetails

- TableMappings

- SyncToS3

- DataFormat

- CdcStartTime

- CdcStopPosition

- TargetExtraConnectionAttributes

- TaskRetryConfig

- AdditionalTaskSettings

- SourceExtraConnectionAttributes

- SharedInstance

- InstanceConfig (InstanceMultiAZ, InstanceClass, AllocatedStorage, InstanceAZ, DmsVersion)

-

By default Amorphic assumes the My Data Files Have Headers setting as No for datasets when a dataflow is created. So, user must select the datasets (when CreateDataset as false) accordingly when creating a dataflow, else there will be a mismatch in counts between Redshit/AuroraMysql and S3Athena when SyncToS3 setting is enabled for a dataflow.

-

Dataflow Entity Migration is now supported, allowing dataflows to be moved from one entity to another for the Full Load + CDC and CDC-only process types when the dataflow is in the Stopped state.

If you are creating or updating a dataflow through the API and your transformation rules include complex queries, you must encode the TableMappings in base64 and provide the encoded value in the TableMappings attribute.