JDBC Bulk data load Transformation Rules

Overview

Transform your data during migration to meet your exact requirements without complex post-processing. Whether you're cleaning messy column names, adding business context, or preparing data for specific target systems, these transformation rules help you shape your data exactly as needed.

Source Table Reference

This source table contains common data challenges you'll encounter during migration: inconsistent naming conventions, mixed data types, legacy formats, and sensitive information requiring privacy protection. Each transformation rule below shows how to address these challenges systematically.

| order_id | first_name | last_name | h_product | AMOUNT | numeric_date |

|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 |

| 1002 | Jane | Joseph | Mouse | 25.20 | 15061985 |

| 1003 | Bob | Johnson | Keyboard | 30.00 | 03121990 |

1. Ability to add columns with static values to the dataset

Add consistent metadata, tracking information, or business flags to every row during data migration without modifying your source system.

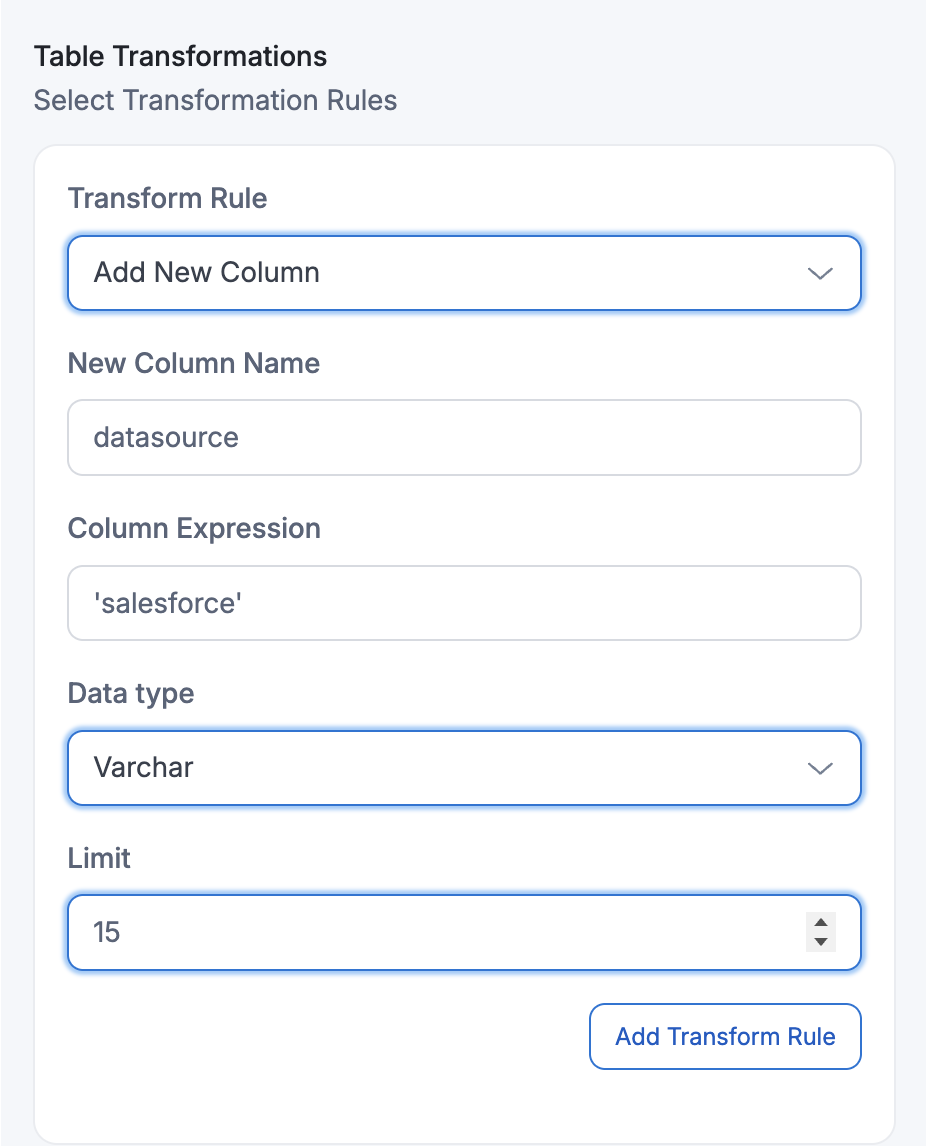

How to add this transformation in Amorphic:

Configure the "Add New Column" transformation by specifying the new column name and the static value that will be applied to every row during migration. Configuration:

- New Column Name:

data_source - Static Value:

salesforce

Result after applying transformation:

| order_id | first_name | last_name | h_product | AMOUNT | numeric_date | data_source |

|---|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 | salesforce |

| 1002 | Jane | Doe | Mouse | 25.20 | 15061985 | salesforce |

| 1003 | Bob | Johnson | Keyboard | 75.00 | 03121990 | salesforce |

Output: Added 'data_source' column with static value "salesforce"

When defining multiple "Add New Column" transformations in the same migration, the behavior may vary depending on your source database. In some cases, it appears that only the first "Add New Column" rule in the sequence gets applied successfully, while subsequent rules might not be processed as expected.

2. Ability to add columns with dynamic values (e.g., ingestion timestamp)

Add automatically generated values like current timestamps, unique identifiers, or computed values to track when and how your data was processed.

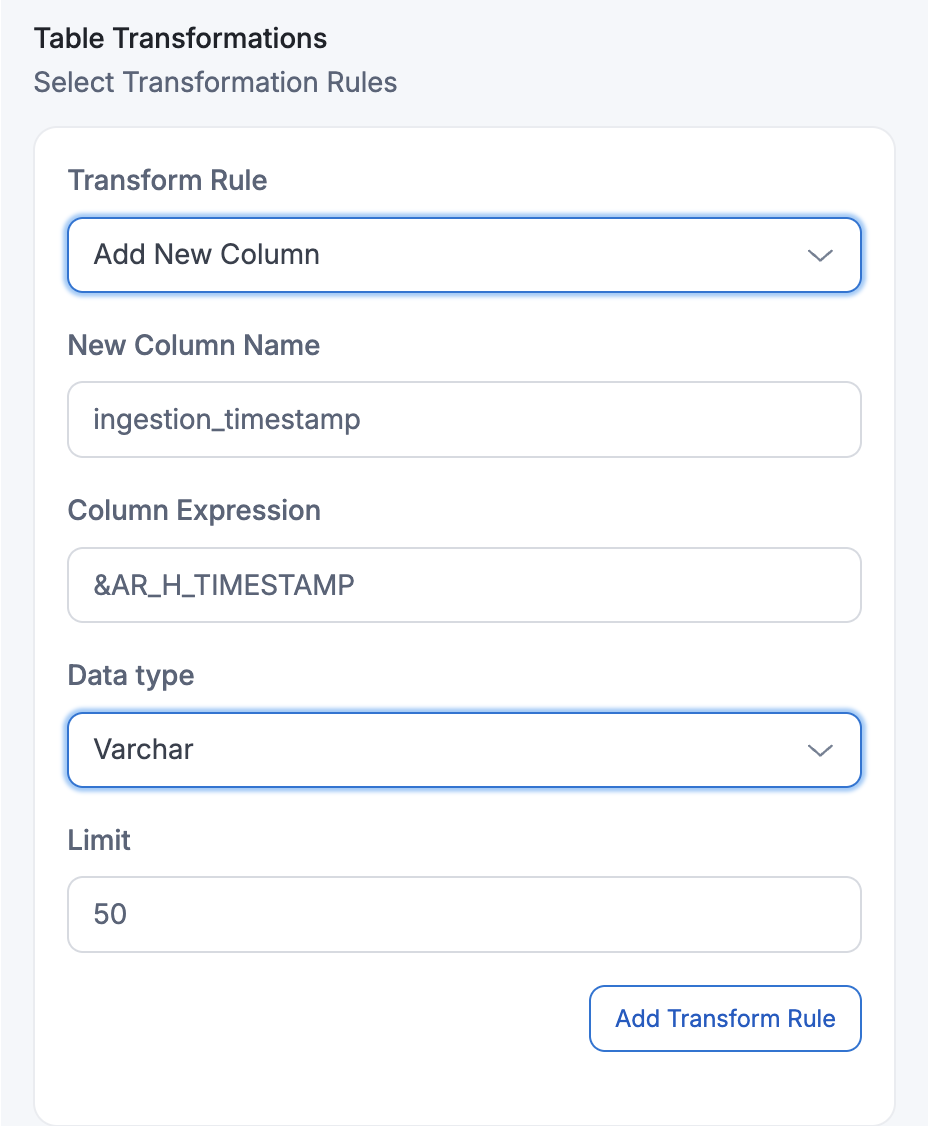

How to add this transformation in Amorphic:

Configure dynamic column generation by providing expression in 'Column Expression' to generate values during the migration process dynamically.

Example 1: Adding Timestamp Column

Configuration:

- New Column Name:

ingestion_timestamp - Dynamic Value: Current timestamp

Result after applying transformation:

| order_id | first_name | last_name | h_product | AMOUNT | numeric_date | ingestion_timestamp |

|---|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 | 2024-01-15 14:30:25 |

| 1002 | Jane | Doe | Mouse | 25.20 | 15061985 | 2024-01-15 14:30:26 |

| 1003 | Bob | Johnson | Keyboard | 75.00 | 03121990 | 2024-01-15 14:30:27 |

Output: Each row gets the timestamp when it was processed during import.

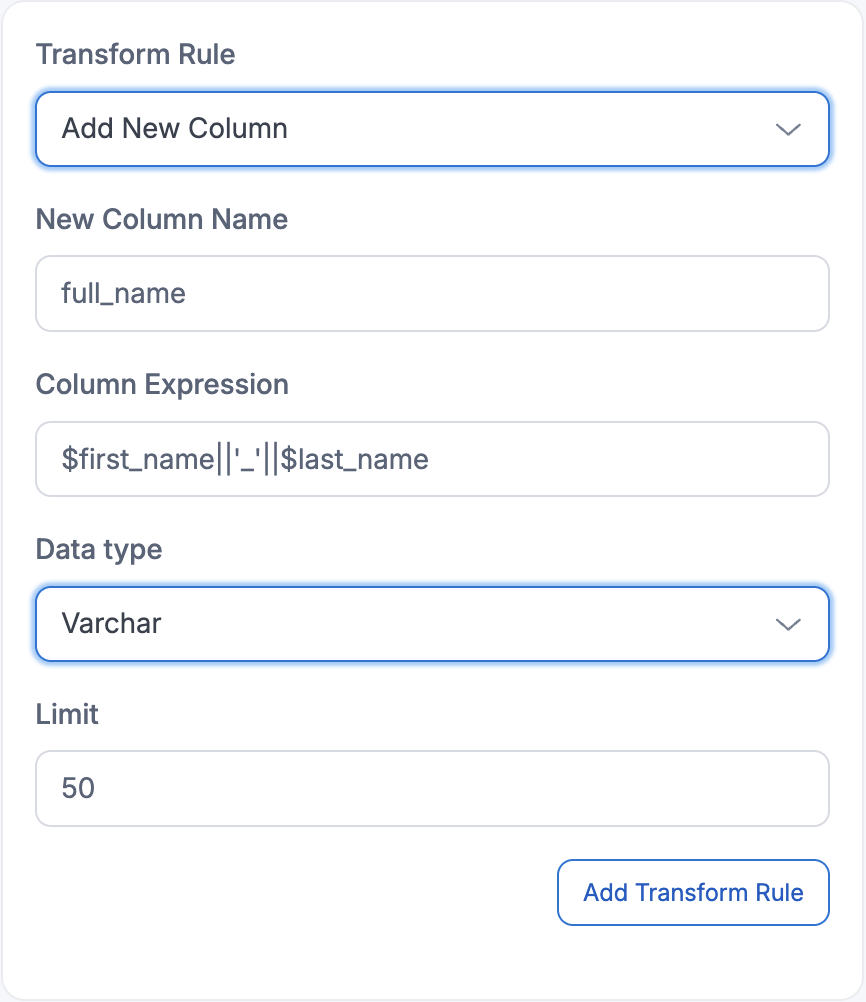

Example 2: Adding Column with Concatenated Values

Configuration:

- New Column Name:

full_name - Dynamic Value: Concatenation of

first_nameand thelast_nameof the source

Result after applying transformation:

| order_id | first_name | last_name | h_product | AMOUNT | numeric_date | full_name |

|---|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 | John_Smith |

| 1002 | Jane | Doe | Mouse | 25.20 | 15061985 | Jane_Doe |

| 1003 | Bob | Johnson | Keyboard | 75.00 | 03121990 | Bob_Johnson |

Each row gets a new column that combines values from existing first_name and last_name columns with a space separator.

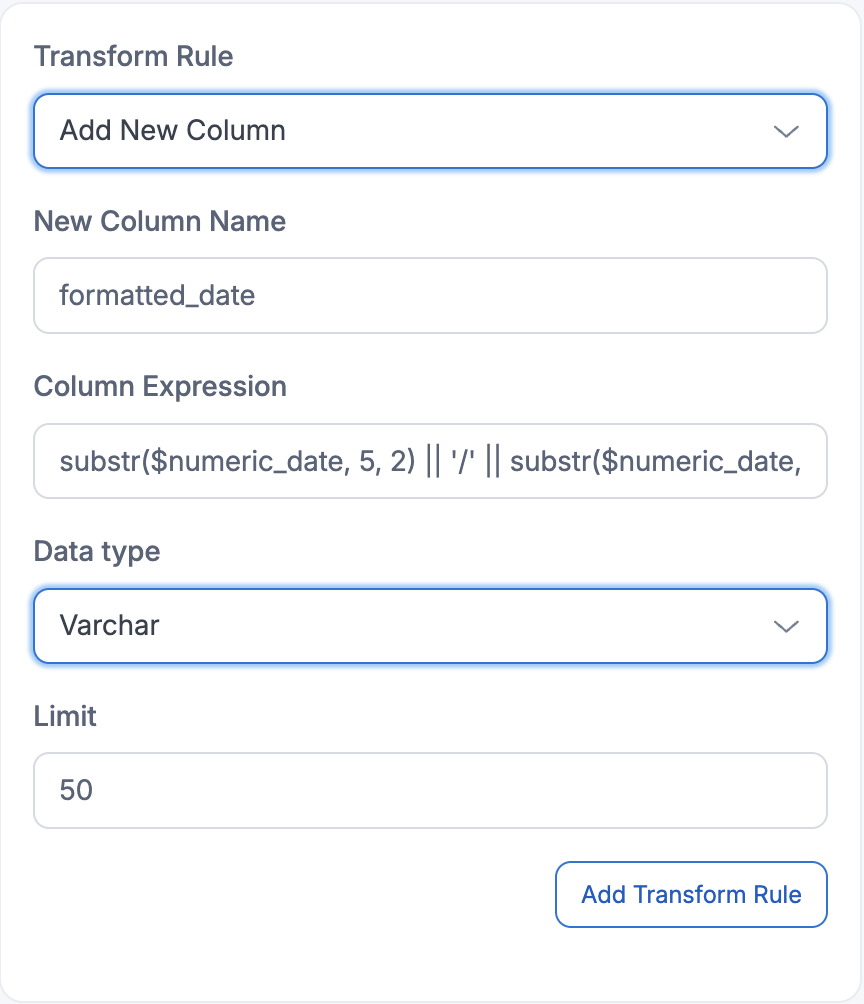

Example 3: Format column values using functions

Configuration:

- Target Column:

numeric_date(containing numeric values like 20081988) - Function: Convert numeric date to proper date format (MM/DD/YYYY)

Result after applying transformation:

| order_id | first_name | last_name | h_product | AMOUNT | numeric_date | formatted_date |

|---|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 | 08/20/1988 |

| 1002 | Jane | Doe | Mouse | 25.20 | 15061985 | 06/15/1985 |

| 1003 | Bob | Johnson | Keyboard | 75.00 | 03121990 | 12/03/1990 |

The transformation function parsed the numeric format (DDMMYYYY) and reformatted it to MM/DD/YYYY

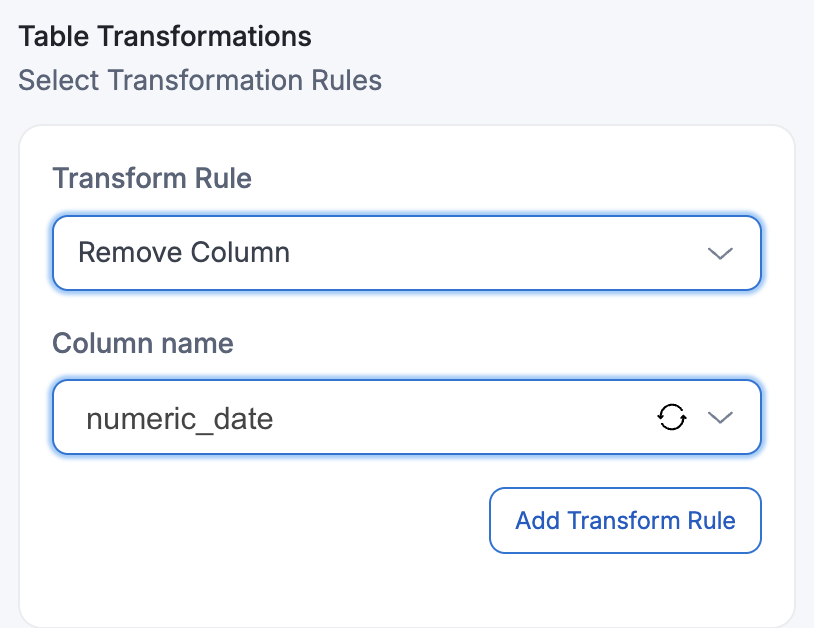

3. Ability to remove unwanted columns from the dataset

Remove unnecessary, sensitive, or redundant columns that don't need to be migrated to your target system, helping to reduce data volume and improve security.

How to add this transformation in Amorphic:

Configure the "Remove Column" transformation by selecting the specific column you want to exclude from the migration. This is useful for removing legacy data, sensitive information, or unnecessary fields that aren't needed in the target system.

Configuration:

- Target Column:

numeric_date(legacy date format no longer needed) - Transformation: Remove Column

Result after applying transformation:

| order_id | first_name | last_name | h_product | AMOUNT |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

Output: The numeric_date column has been completely removed from the target dataset, reducing data volume and eliminating the legacy date format.

4. Column name standardization

Remove or replace special characters in column names to ensure compatibility and cleanliness

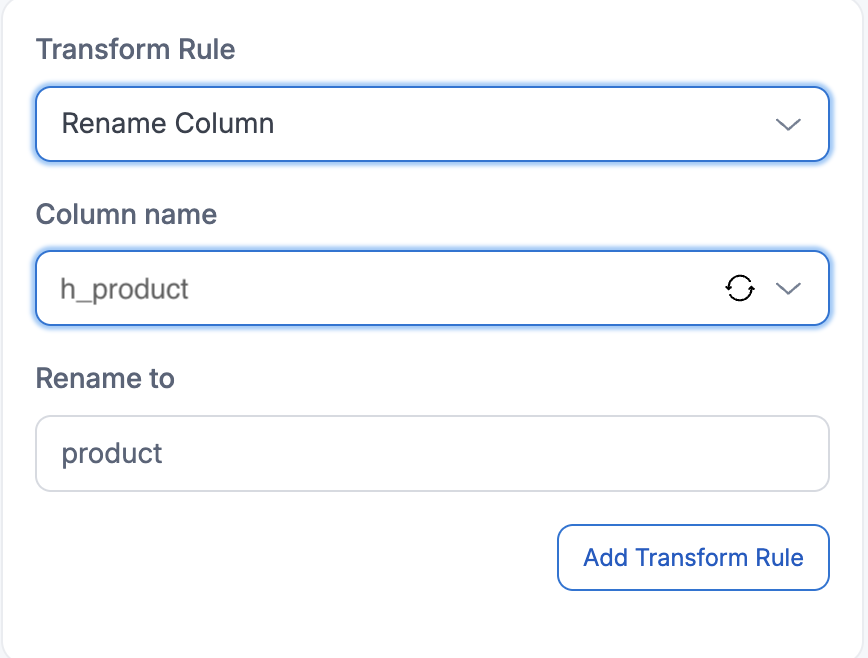

Example 1: Renaming the column

Completely rename columns that have special characters, spaces, or non-standard naming that needs to be replaced with clean, standardized names.

Configuration:

- Target Column:

h_product(contains some extra characters: 'h_') - Transformation: Rename to just

product

Result after applying transformation:

| order_id | first_name | last_name | product | AMOUNT |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

The special characters (h_) were removed by using a completely new name: h_product was completely renamed to product

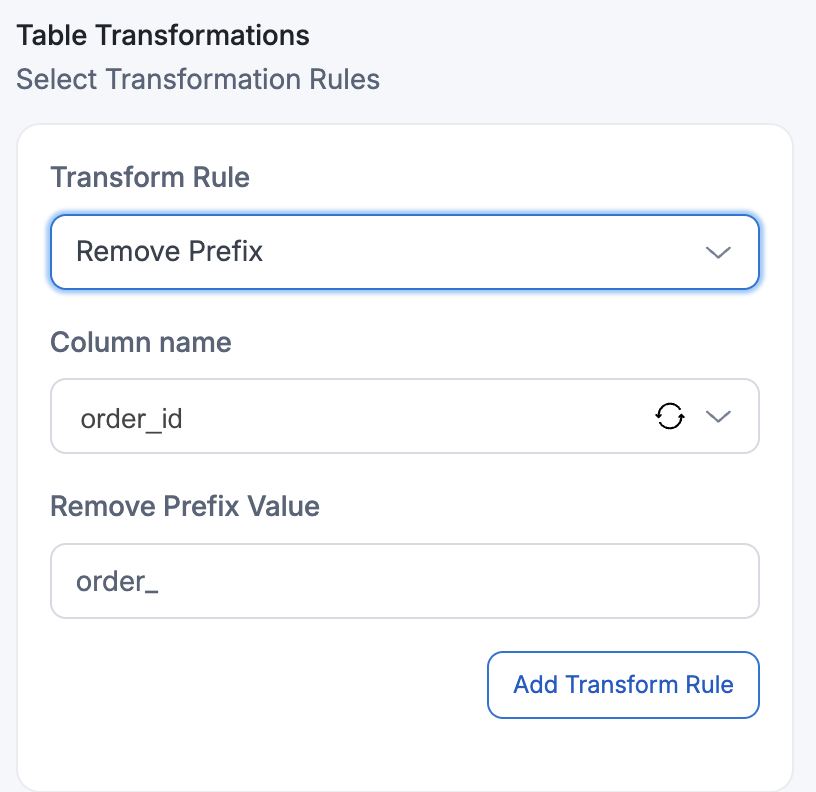

Example 2: Removing the prefix

Configuration:

- Target Columns: Column with

order_prefix - Transformation: Remove Prefix

Result after applying transformation:

| id | first_name | last_name | product | AMOUNT |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

- The "order_" prefix was removed from the column name:

order_idbecameid

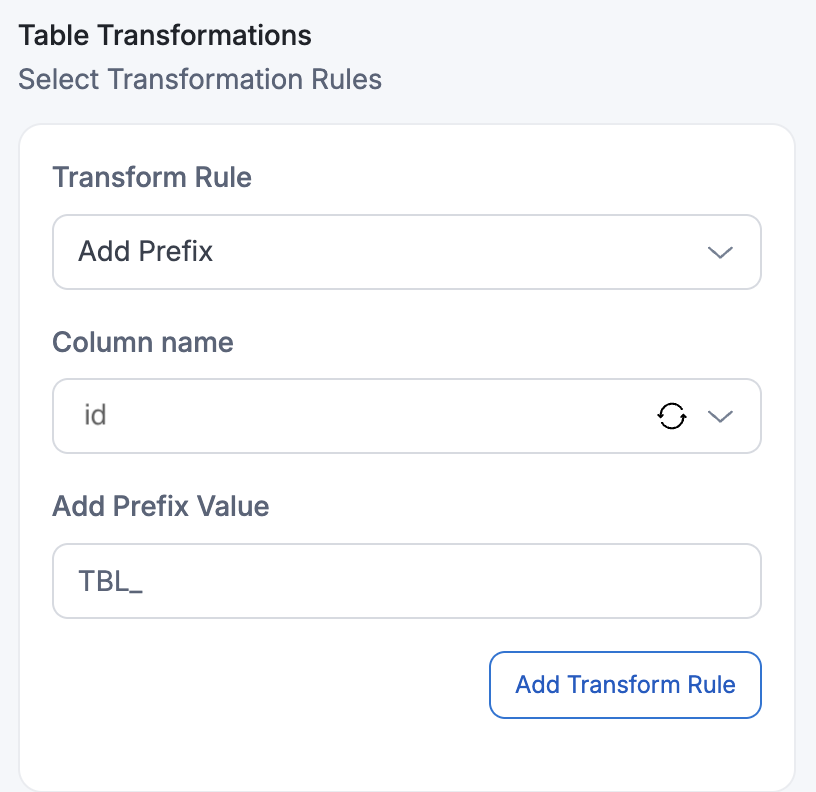

Example 3: Adding prefix to column names

Configuration:

- Target Column:

id - Transformation: Add Prefix value:

TBL_

Result after applying transformation:

| TBL_id | first_name | last_name | product | amount |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

Output: The prefix "TBL_" was added to the column name: id became TBL_id

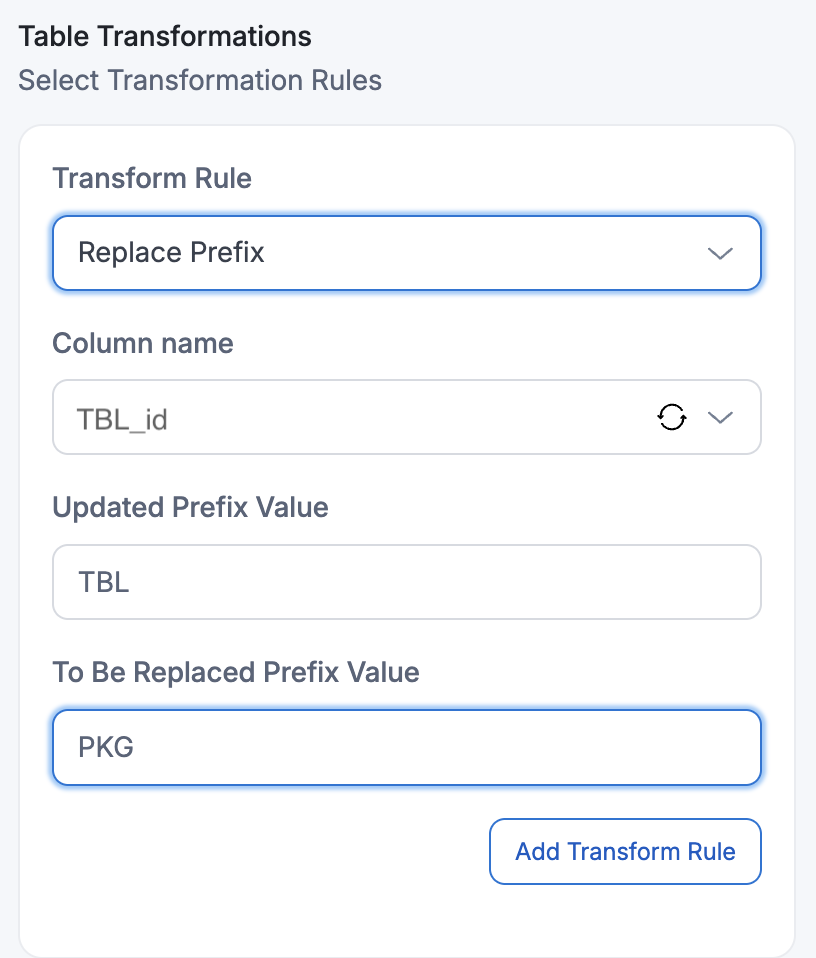

Example 4: Replacing prefix in column names

Configuration:

- Target Column:

TBL_id(column with existing prefix) - Transformation: Replace Prefix

TBL_withPKG_

Result after applying transformation:

| PKG_id | first_name | last_name | product | amount |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

Output: The prefix was replaced: TBL_id became PKG_id

Example 5: Add/Remove/Replace suffix operations

Add/Remove/Replace suffix operations follow the same approach as the prefix transformations shown above.

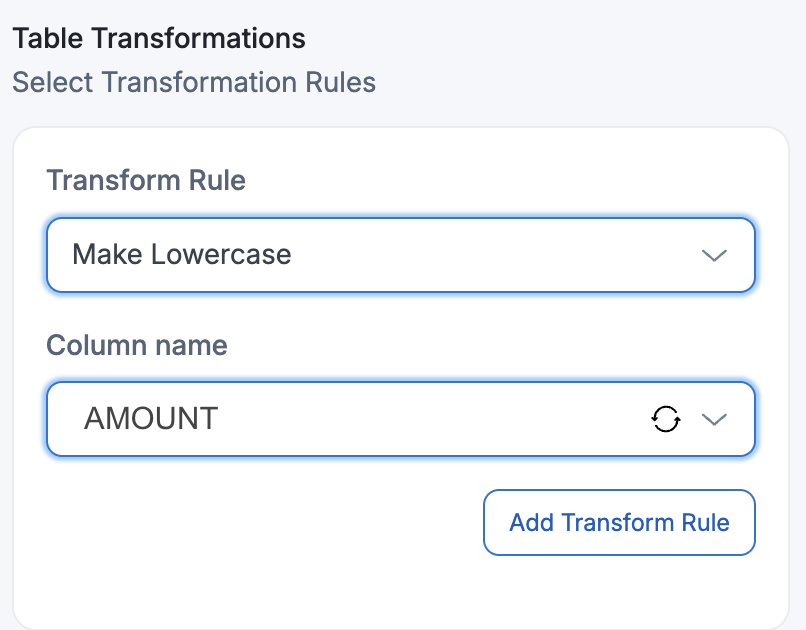

5. Convert column names to lowercase

Standardize column naming by converting uppercase or mixed-case column names to lowercase format for consistency and compatibility with target systems that prefer lowercase naming conventions.

How to add this transformation in Amorphic:

Configure the "Convert to Lowercase" transformation by selecting the target column that needs to be converted from uppercase to lowercase format.

Configuration:

- Target Column:

AMOUNT(currently in uppercase) - Transformation: Make Lowercase

Result after applying transformation:

| PKG_id | first_name | last_name | product | amount |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 |

| 1002 | Jane | Doe | Mouse | 25.20 |

| 1003 | Bob | Johnson | Keyboard | 75.00 |

Output: The AMOUNT column name was converted to lowercase: AMOUNT became amount

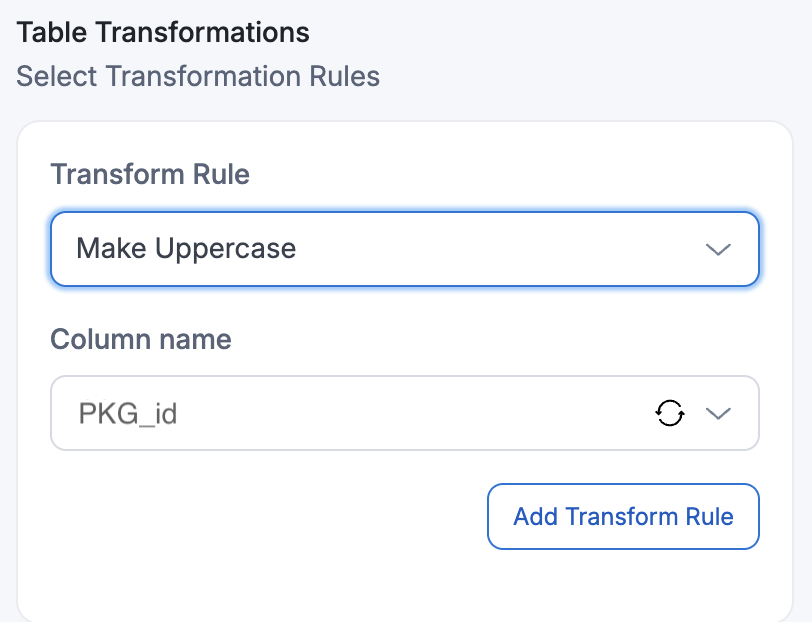

6. Convert column names to uppercase

Standardize column naming by converting lowercase or mixed-case column names to uppercase format for systems that require or prefer uppercase naming conventions.

How to add this transformation in Amorphic:

Configure the "Convert to Uppercase" transformation by selecting the target column that needs to be converted from lowercase to uppercase format.

Configuration:

- Target Column:

PKG_id(currently in lowercase) - Transformation: Make Uppercase

Result after applying transformation:

| PKG_ID | first_name | last_name | product | amount | numeric_date |

|---|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999.10 | 20081988 |

| 1002 | Jane | Doe | Mouse | 25.20 | 15061985 |

| 1003 | Bob | Johnson | Keyboard | 75.00 | 03121990 |

Output: The id column name was converted to uppercase: PKG_id became PKG_ID

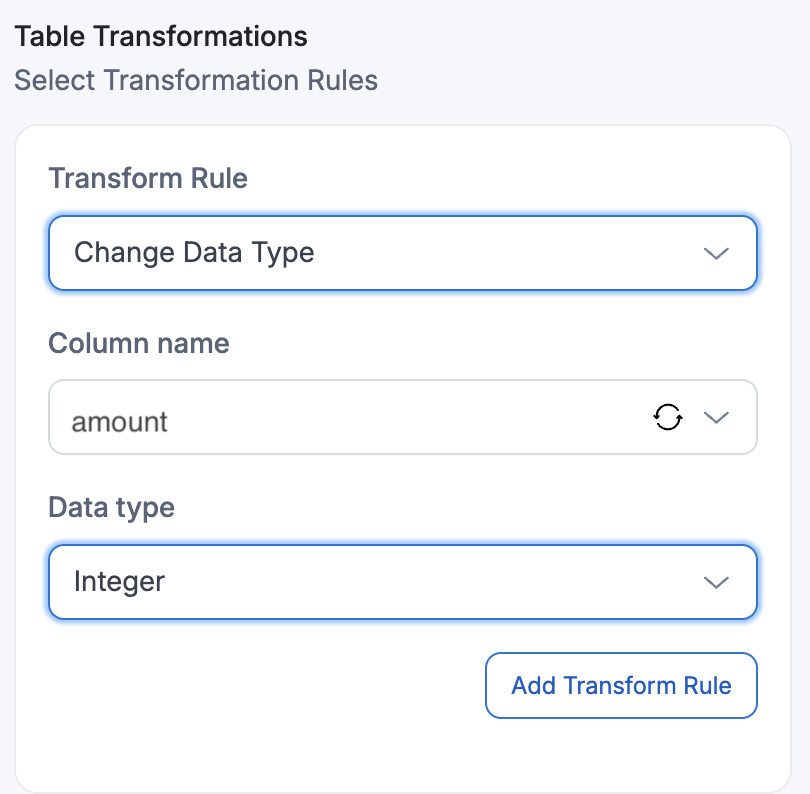

8. Change data type of columns

Convert column data types to match target system requirements, ensuring compatibility and proper data handling during migration.

How to add this transformation in Amorphic:

Configure the "Change Data Type" transformation by selecting the target column and specifying the new data type you want to convert to.

Configuration:

- Target Column:

amount(currently stored as string/text) - Transformation: Change Data Type into

integer

Result after applying transformation:

| PKG_ID | name | last_name | product | amount |

|---|---|---|---|---|

| 1001 | John | Smith | Laptop | 999 |

| 1002 | Jane | Doe | Mouse | 25 |

| 1003 | Bob | Johnson | Keyboard | 75 |

Output: The amount column data type was changed from string/text to integer for proper numeric operations

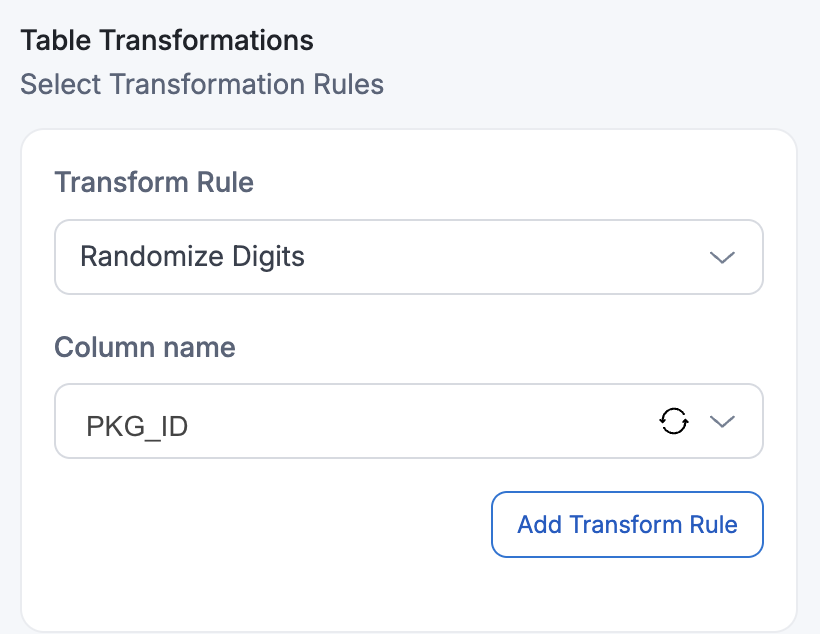

9. Randomize digits for data anonymization

Randomize numeric values in specified columns to create anonymized datasets while preserving data format and structure for testing and development purposes.

How to add this transformation in Amorphic:

Configure the "Randomize Digits" transformation by selecting the target column containing numeric values you want to randomize.

Configuration:

- Transformation: Randomize Digits

- Target Column:

PKG_ID(contains ID numbers to randomize)

Result after applying transformation:

| PKG_ID | name | last_name | product | amount |

|---|---|---|---|---|

| 7382 | John | Smith | Laptop | 999 |

| 9156 | Jane | Doe | Mouse | 25 |

| 4729 | Bob | Johnson | Keyboard | 75 |

Output: The PKG_ID column values were randomized from their original values (1001, 1002, 1003) to random numbers (7382, 9156, 4729)

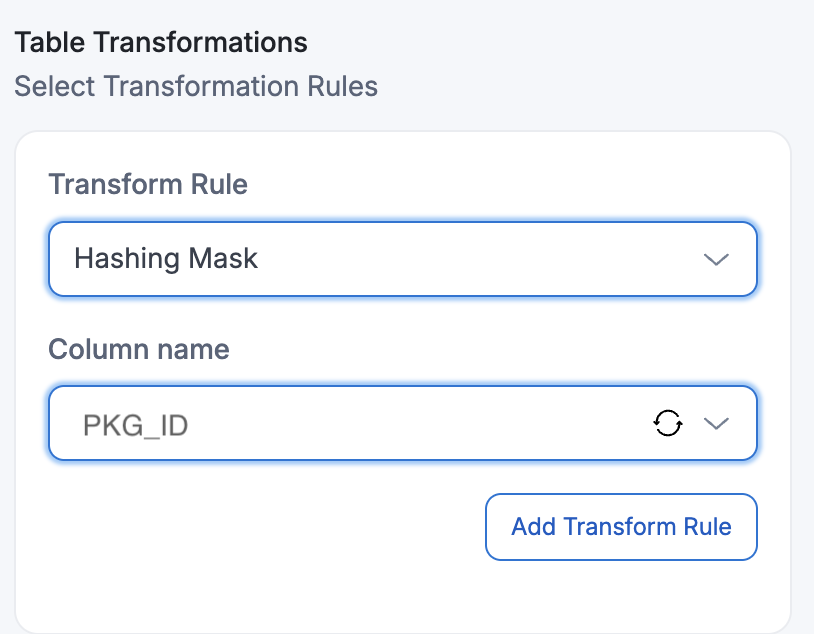

10. Hashing mask for data security

Apply cryptographic hashing to sensitive data columns to create irreversible masked values while maintaining data uniqueness for analytics and testing purposes.

How to add this transformation in Amorphic:

Configure the "Hashing Mask" transformation by selecting the target column containing sensitive data you want to hash.

Configuration:

- Transformation: Hashing Mask

- Target Column:

PKG_ID(contains sensitive ID numbers to hash)

Result after applying transformation:

| PKG_ID | name | last_name | product | amount |

|---|---|---|---|---|

| a1b2c3 | John | Smith | Laptop | 999 |

| d4e5f6 | Jane | Doe | Mouse | 25 |

| g7h8i9 | Bob | Johnson | Keyboard | 75 |

Output: The PKG_ID column values were converted to cryptographic hashes (7382 → a1b2c3, 9156 → d4e5f6, 4729 → g7h8i9) for secure data masking

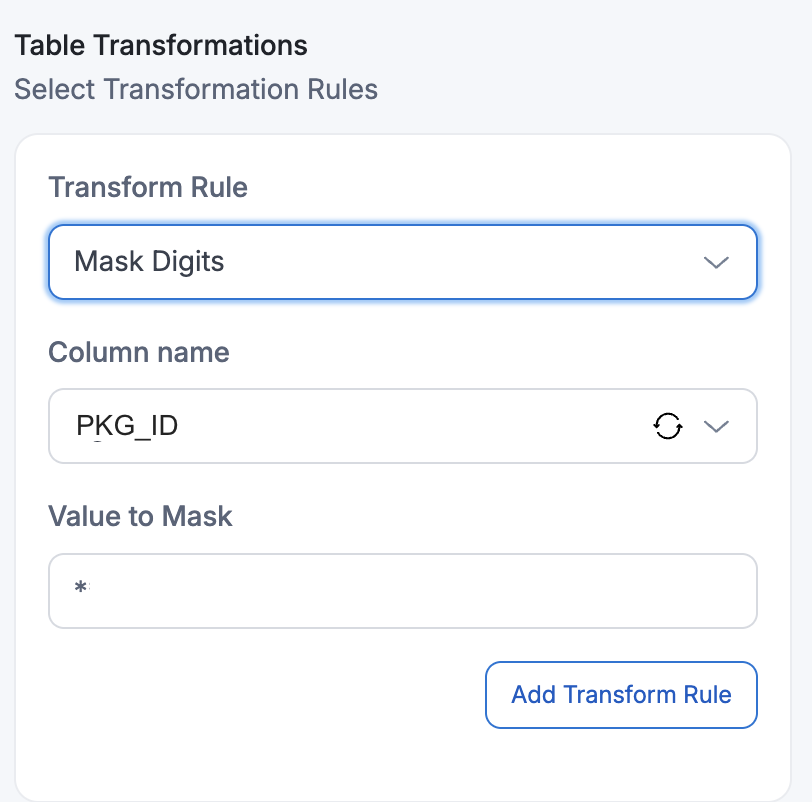

11. Mask digits for data privacy

Mask sensitive numeric data by replacing specific digits with mask characters to protect privacy and comply with data protection regulations.

How to add this transformation in Amorphic:

Configure the "Mask Digits" transformation by specifying the target column and the masking value/pattern you want to apply.

Configuration:

- Transformation: Mask Digits

- Target Column:

PKG_ID(contains randomized ID numbers to mask) - Value to Mask:

*

Result after applying transformation:

| PKG_ID | name | last_name | product | amount |

|---|---|---|---|---|

| ****** | John | Smith | Laptop | 999 |

| ****** | Jane | Doe | Mouse | 25 |

| ****** | Bob | Johnson | Keyboard | 75 |

Output: The PKG_ID column values were masked with * to protect the hashed identifier information

- Using any of the Masking rules for a column with an incompatible data type will cause an error in the dataflow. You may add a change-data-type transformation rule action to change the data type of the column to a compatible type if your source column type is not supported for the masking option you plan to use.

- The rule-id of the change-data-type transformation should be a smaller number than the rule-id of the masking transformation so that the data type change happens before masking.

- The masking value requires a single non-empty, non-whitespace, printable ASCII character except disapproved characters [\, --, \n, ", \b, \r, ', \t, ;]

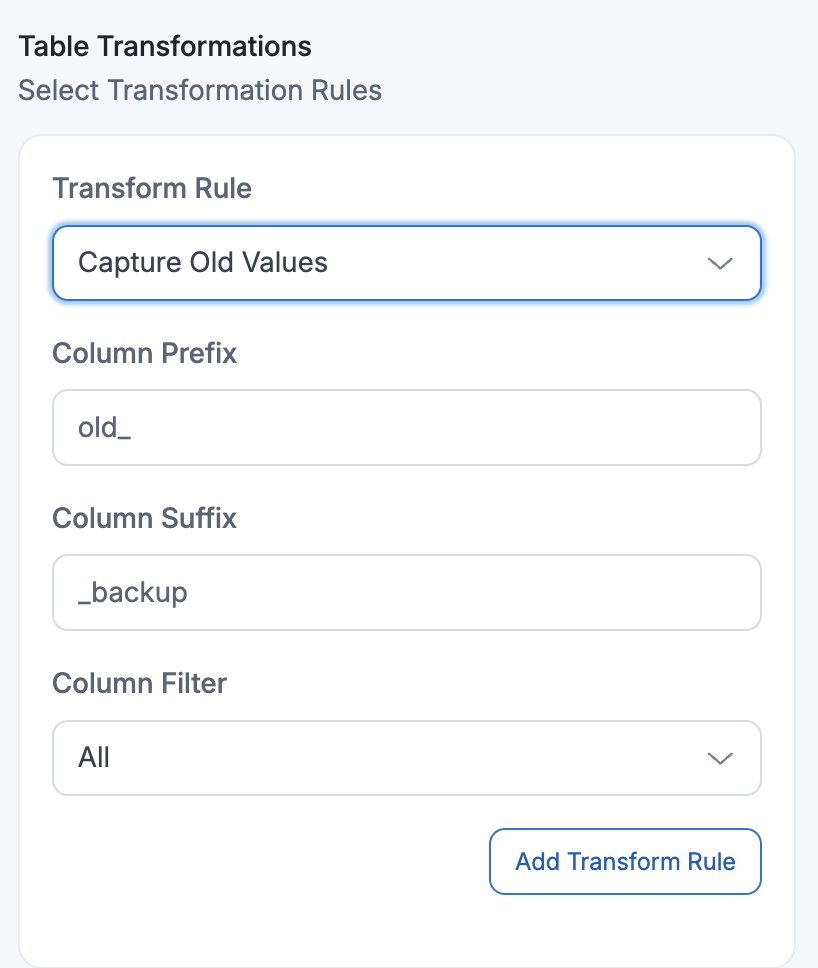

12. Capture old values for change tracking

Create corresponding columns with prefix and suffix to store old values before updates occur. This transformation is essential for change data capture scenarios where you need to track what values were changed during updates.

How to add this transformation in Amorphic:

Configure the "Capture Old Values" transformation by specifying the prefix and suffix that will be added to create new columns for storing previous values.

Configuration:

- Transformation: Capture Old Values

- Column Prefix:

old_ - Column Suffix:

_backup

Result after applying transformation:

| PKG_ID | name | last_name | product | amount | old_PKG_ID_backup | old_name_backup | old_last_name_backup | old_product_backup | old_amount_backup |

|---|---|---|---|---|---|---|---|---|---|

| **** | John | Smith | Laptop | 999 | 1001 | John | Smith | Laptop | 999 |

| **** | Jane | Joseph | Mouse | 25 | 1002 | Jane | Doe | Mouse | 25 |

| **** | Bob | Johnson | Keyboard | 30 | 1003 | Bob | Johnson | Keyboard | 75 |

Output: New columns were created for each source column using the pattern old_[columnname]_backup to capture original values before source table updates.

This transformation rule is only applicable for Dataflows configured as:

- FullLoad + CDC (Change Data Capture)

- CDC only

- This rule will NOT work with FullLoad only dataflows, as there are no change events to capture old values from.